This page is a chronological list of noteworthy MIRI media appearances, press coverage, talks, and writings since our strategy pivot in early 2023. We’ve marked with a star (⭐) the entries we expect to be most useful to check out.

January 2024

In a new report, Peter Barnett and Jeremy Gillen argue that AI systems capable of large-scale scientific research will likely pursue unwanted goals, with catastrophic results.

December 2023

Malo Bourgon is interviewed on AI x-risk and MIRI’s policy recommendations.

Tech Policy Press — “US Senate AI ‘Insight Forum’ Tracker.”

Press coverage of the AI Insight Forum. The relevant section is on Forum Eight. A choice quote:

[…] The eighth forum, focused on ‘doomsday scenarios,’ was divided into two parts, with the first hour dedicated to AI risks and the second on solutions for risk mitigation. It started with a question posed by Sen. Schumer, asking the seventeen participants to state their respective “p(doom)” and “p(hope)” probabilities for artificial general intelligence (AGI) in thirty seconds or less.

Some said it was ‘surreal’ and ‘mind boggling’ that p(dooms) were brought up in a Senate forum. “It’s encouraging that the conversation is happening, that we’re actually taking things seriously enough to be talking about that kind of topic here,” Malo Bourgon, CEO of the Machine Intelligence Research Institute (MIRI), told Tech Policy Press. […]

Washington Examiner — “‘Doomsday’ artificial intelligence: Schumer and Congress study existential risks.”

A short piece on the “Risk, Alignment, and Guarding Against Doomsday Scenarios” Senate forum.

AI Insight Forum — “Written statement of MIRI CEO Malo Bourgon.”

Malo Bourgon was one of sixteen people invited by U.S. Senate Majority Leader Chuck Schumer and Senators Todd Young, Mike Rounds, and Martin Heinrich to give written statements at the Eighth Bipartisan Senate Forum on Artificial Intelligence.

In our statement, we explain AI existential risk and propose a series of steps the US can take today to help address it, grouped under three headings: “Domestic AI Regulation”, “Global AI Coalition”, and “Governing Computing Hardware”. As of April 2024, this continues to be MIRI’s primary resource for outlining our policy proposals.

November 2023

Nate Soares argues that “It’s no surprise that the AI falls down on various long-horizon tasks and that it doesn’t seem all that well-modeled as having ‘wants/desires’; these are two sides of the same coin.”

Soares argues that as AI improves on long-horizon tasks, we should expect it to “cause some particular outcome across a wide array of starting setups and despite a wide variety of obstacles”, which introduces the classic risks of misaligned AI.

October 2023

Nate Soares and Matthew Gray review the UK government’s AI Safety Policy asks (including some glaring omissions), as well as the AI Safety Policies submitted by seven participating organizations.

Soares ranks the organizations’ policies: “Anthropic > OpenAI >> DeepMind > Microsoft >> Amazon >> Meta”.

Nate Soares discusses three major hurdles humanity faces when it comes to “AI as a science”:

- Most research that helps one point AIs probably also helps one make more capable AIs. A “science of AI” would probably increase the power of AI far sooner than it allows us to solve alignment.

- In a world without a mature science of AI, building a bureaucracy that reliably distinguishes real solutions from fake ones is prohibitively difficult.

- Fundamentally, for at least some aspects of system design, we’ll need to rely on a theory of cognition working on the first high-stakes real-world attempt.

July 2023

Conversations with Coleman — “Will AI Destroy Us?”

Coleman Hughes hosts a roundtable discussion between Eliezer Yudkowsky, Scott Aaronson, and Gary Marcus.

Bankless — “Tackling the Alignment Problem.”

Nate Soares discusses existential risk from AI.

Hold These Truths — “Can We Stop the AI Apocalypse?”

A discussion of AI x-risk between Eliezer Yudkowsky and Dan Crenshaw.

The Spectator — “Will AI Kill Us?”

Yudkowsky appears on a segment of The Week in 60 Minutes to discuss why superintelligent AI is a massive extinction risk.

Bloomberg AI IRL — “The Only Winner of an AI Arms Race Will Be AI.”

Eliezer Yudkowsky outlines the reasoning behind AI “doom” concerns.

Eliezer Yudkowsky discusses AGI ruin scenarios in a mini-essay, alongside short essays by Max Tegmark, Brittany Smith, Ajeya Cotra, and Yoshua Bengio.

The David Pakman Show — “AI will kill us all.”

A short but very solid conversation with Yudkowsky on “the most dangerous thing we can’t imagine”. The discussion focuses on “What is the mechanism through which AI could exert control over the physical world?”, and on the implications of this scenario. Some quotes from Yudkowsky:

- “It’s something that has its own plans. You don’t get to just plan how to use it; it is planning how to use itself.”

- “I think my basic factual point of view is that if we do not somehow avoid doing this, we’re all going to die. And my corresponding policy view is that we should not do this, even if that’s really quite hard.”

May 2023

Econtalk — “Eliezer Yudkowsky on the Dangers of AI.”

Yudkowsky and Russ Roberts debate whether AI poses an existential risk.

The Logan Bartlett Show — “Eliezer Yudkowsky on if Humanity can Survive AI.”

In possibly the best multi-hour video introduction to AI risk, Logan Bartlett and Eliezer Yudkowsky discuss the shape of the risk thoroughly and in depth.

The Fox News Rundown — “Extra: Why A Renowned A.I. Expert Says We May Be ‘Headed For A Catastrophe.’”

A podcast interview with Eliezer Yudkowsky, aimed at a general audience that wants to know the basics.

April 2023

MIRI Blog — “The basic reasons I expect AGI ruin.”

Rob Bensinger discusses the ten core reasons the situation with AI looks lethally dangerous to him, from likely capability advantages of STEM-capable AGI to alignment challenges.

Center for the Future Mind — “Is Artificial General Intelligence too Dangerous to Build?”

A live Florida Atlantic University Q&A session with Eliezer Yudkowsky.

TED2023 — “Will superintelligent AI end the world?”

In a TED Talk, Eliezer Yudkowsky presents a very concise version of the case for stopping progress toward smarter-than-human AI.

MIRI Blog — “Misgeneralization as a misnomer.”

Nate Soares argues that “misgeneralization” is a misleading way to think about many AI alignment difficulties. E.g.:

[I]t’s not that primates were optimizing for fitness in the environment, and then “misgeneralized” after they found themselves in a broader environment full of junk food and condoms. The “aligned” behavior “in training” broke in the broader context of “deployment”, but not because the primates found some weird way to extend an existing “inclusive genetic fitness” concept to a wider domain. Their optimization just wasn’t connected to an internal representation of “inclusive genetic fitness” in the first place.

Dwarkesh Podcast — “Why AI Will Kill Us, Aligning LLMs, Nature of Intelligence, SciFi, & Rationality.”

Dwarkesh Patel interviews Eliezer Yudkowsky on AGI risk. Some good discussion ensues, though this isn’t the best first introduction to the topic.

March 2023

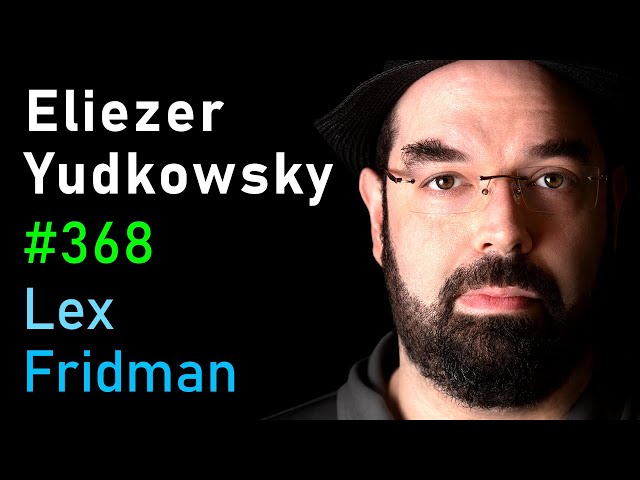

Lex Fridman Podcast — “Dangers of AI and the End of Human Civilization.”

Lex Fridman discusses a wide range of topics with Eliezer Yudkowsky.

TIME Magazine — “Pausing AI Developments Isn’t Enough. We Need to Shut it All Down.”

Eliezer Yudkowsky pens an op-ed arguing for an international ban on frontier AI development. (Mirror with addenda.)

MIRI Blog — “Deep Deceptiveness.”

Nate Soares discusses a hypothetical example of AI deceptiveness, to illustrate why issues like deceptiveness can be hard to root out.

MIRI Blog — “Comments on OpenAI’s ‘Planning for AGI and beyond.’”

Nate Soares shares his thoughts on an OpenAI strategy blog post by Sam Altman.

February 2023

Bankless Podcast — “We’re All Gonna Die.”

Eliezer Yudkowsky explains the basics of AI risk in a long-form interview. (Transcript.)

Nate Soares writes up some general advice for people who want to seriously tackle existential risk.