This is part of the MIRI Single Author Series. Pieces in this series represent the beliefs and opinions of their named authors, and do not claim to speak for all of MIRI.

Okay, I’m annoyed at people covering AI 2027 burying the lede, so I’m going to try not to do that. The authors predict a strong chance that all humans will be (effectively) dead in 6 years, and this agrees with my best guess about the future. (My modal timeline has loss of control of Earth mostly happening in 2028, rather than late 2027, but nitpicking at that scale hardly matters.) Their timeline to transformative AI also seems pretty close to the perspective of frontier lab CEOs (at least Dario Amodei, and probably Sam Altman) and the aggregate market opinion of both Metaculus and Manifold!

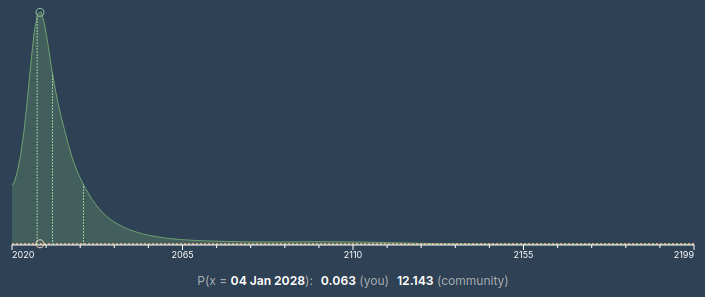

If you look on those market platforms you get graphs like this:

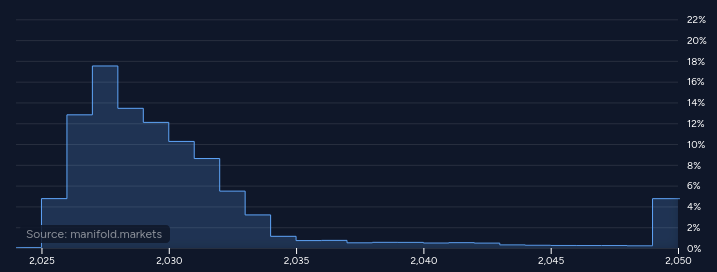

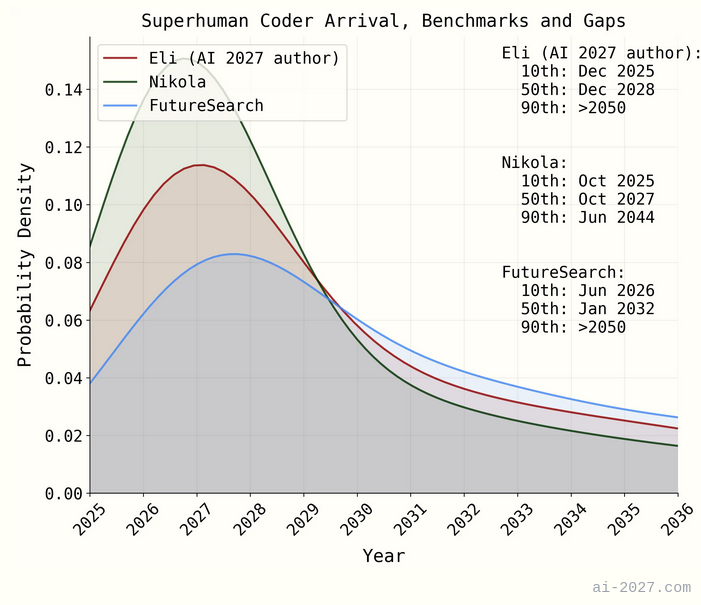

Both of these graphs have a peak (a.k.a. mode) in or at the very tail end of 2027 and a weighted midpoint (a.k.a. median) in 2031. Compare with this graph from AI 2027’s timelines forecast:

Notably, this is apples-to-oranges, in that superhuman coders are not the same as “AGI.” But if we ignore that, we can hallucinate that FutureSearch has approximately the market timelines, while Eli Lifland (and perhaps other authors) has a faster, but not radically faster timeline. The markets are less convinced that superintelligence will follow immediately thereafter, but so are the authors of AI 2027! Predictions are hard, especially about the future, etc.

But I also feel like emphasizing two big points about these overall timelines:

Mode ≠ Median

As far as I know, nobody associated with AI 2027 is actually expecting things to go as fast as depicted. Rather, this is meant to be a story about how things could plausibly go fast. The explicit methodology of the project was “let’s go step-by-step and imagine the most plausible next-step.” If you’ve ever done a major project (especially one that involves building or renovating something, like a software project or a bike shed), you’ll know how often this approach is wildly out of touch with reality. Specifically, it’s a recipe for the planning fallacy.

In the real world, shit happens. Trump imposes insane tariffs on GPUs. A major terrorist attack happens. A superpower starts a war with its neighbor (*coughTaiwancough*). A critical CEO is found to be a massive criminal. Congress passes insane regulations. Et cetera. The actual course of the next 30 months is going to involve wild twists and turns, and while some turns can speed things up (e.g. a hardware breakthrough), they can’t speed things up as much as some turns can slow things down (e.g. firm regulation; civilizational collapse).

AI 2027 knows this. Their scenario is unrealistically smooth. If they added a couple of weird, impactful events, it would be more realistic in its weirdness, but of course it would be simultaneously less realistic in that those particular events are unlikely to occur. This is why the modal narrative, which is more likely than any other particular story, centers around loss of human control at the end of 2027, but the median narrative is probably around 2030 or 2031.
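To make the mode/median gap concrete, here is a toy Python sketch. Every number in it (the baseline date and the count, probability, and size of each shock) is a made-up assumption for illustration, not taken from AI 2027 or from any market. It enumerates every combination of a few independent “weird events,” where speedups are small and slowdowns are large, then reports the single most likely outcome (the mode) and the median.

```python
from itertools import product

# Hypothetical, invented shock model: each event independently happens with
# probability p and shifts the arrival date by `shift` years.
SPEEDUPS = [(0.2, -0.5)] * 2   # e.g. a hardware breakthrough (small effect)
SLOWDOWNS = [(0.1, 2.0)] * 8   # e.g. tariffs, a war, firm regulation (big effect)
BASELINE = 2027.9              # the "smooth" story: late 2027


def outcome_distribution(events):
    """Exact distribution of the total shift, enumerating every combination."""
    dist = {}
    for happened in product([False, True], repeat=len(events)):
        prob, shift = 1.0, 0.0
        for occurs, (p, s) in zip(happened, events):
            prob *= p if occurs else 1 - p
            shift += s if occurs else 0.0
        dist[shift] = dist.get(shift, 0.0) + prob
    return dist


def mode_and_median(dist):
    """Mode = most probable shift; median = first shift where the CDF reaches 0.5."""
    mode = max(dist, key=dist.get)
    cumulative = 0.0
    for value in sorted(dist):
        cumulative += dist[value]
        if cumulative >= 0.5:
            return mode, value
    return mode, max(dist)  # only reachable through float rounding


dist = outcome_distribution(SPEEDUPS + SLOWDOWNS)
mode, median = mode_and_median(dist)
print(f"mode:   {BASELINE + mode:.1f}")
print(f"median: {BASELINE + median:.1f}")
```

With these invented numbers, the “nothing weird happens” story is the single most likely outcome (about 28%), so the mode stays at 2027.9, yet the median lands around 2029.4, because the plausible delays are bigger than the plausible speedups.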

An important takeaway from this is that we should expect people to look back on this scenario and think it was too fast (because it didn’t account for [unlikely event that happened anyway]). I don’t know any way around this; it’s largely going to be a result of people not being prediction-literate. Still, best to write down the prediction of the backlash in advance, and I wish AI 2027 had done this more visibly. (It’s tucked away in a few places, such as footnote #1.)

There’s a Decent Chance of Having Decades

In a similar vein as the above, nobody associated with AI 2027 (or the market, or me) thinks there’s more than a 95% chance that transformative AI will happen in the next twenty years! I think most of the authors probably think there’s significantly less than a 90% chance of transformative superintelligence before 2045.

Daniel Kokotajlo said on the Win-Win podcast (I think) that he is much less doomy if superintelligence is developed after 2030 than if it arrives before 2030, and I agree. I think if we somehow make it to 2050 without having handed the planet over to AI (or otherwise caused a huge disaster), we’re pretty likely to be in the clear. And, according to everyone involved, that is plausible (but unlikely).

If this is how we win, we should be pushing hard to make it happen and slow things down! Maybe once upon a time if we’d paused all AI capabilities progress until we’d solved alignment then we’d have been stuck searching the wrong part of the space with no feedback loops or traction, but the world is different now. Useful alignment and interpretability work is being done on existing models, and it seems likely to me that the narrative of “we have to rush ahead in order to see the terrain that must be navigated” no longer has any truth.

It also means we should be dedicating some of our planning efforts to navigating futures where AI goes slowly. If we successfully pause AI capabilities pre-singularity, we can’t outsource solving the world’s problems to the AI. Geopolitical stability, climate change, and general prosperity in these futures are worth investing in, since it currently looks like paths through superintelligence are doomed, and there’s some chance that we’ll need to do things ourselves. Make Kelly-bets. Live for the good future.

More Thoughts

I want to emphasize that I think AI 2027 is a really impressive scenario/forecast! The authors clearly did their homework and are thinking about things in a sane way. I’ve been telling lots of people to read it, and I’m very glad it exists. The Dwarkesh Podcast interviewing Daniel and Scott is also really good.

One of the core things I think AI 2027 does right is put their emphasis on recursive self-improvement (RSI). I see a lot of people mistakenly get distracted by broad access to “AGI” or development of “full superintelligence,” such as whether AI is better at telling jokes than a human. These questions are ultimately irrelevant to the core danger of rapidly increasing AI power.

The “Race” ending, I think, is the only realistic path presented. The “Slowdown” ending contains a lot of wildly optimistic miracles. In the authors’ defense, Slowdown isn’t meant to be realistic, but I suspect I find it even less realistic than they do, and people should understand that it’s an exercise in hope. (Exercises in hope are good! I did a similar thing in my Corrigibility as Singular Target agenda last year. They just need to be flagged as such.)

I also really appreciate the authors’ efforts to get people to dialogue with AI 2027, and propose alternative models and/or critique their own. In the interests of doing that, I’ve written down my thoughts, section-by-section, which somewhat devolve into exploring alternate scenarios. The TLDR for this section is:

- I expect the world to be more crazy, both in the sense of chaotic and in the sense of insane. I predict worse information security, less “waking up,” less concern for safety, more rogue agents, and more confusion/anger surrounding AI personhood.

- I expect things to go slightly slower, but not by much, mostly due to hitting more bottlenecks faster and having a harder time widening them.

- I expect competition to be fiercer, race dynamics to persist, and China to be more impressive/scary.

Into the weeds we go!

Mid 2025

Full agreement.

Late 2025

I take issue with lumping everything into “OpenBrain.” I understand why AI 2027 does it this way, but OpenAI (OAI), Google DeepMind (GDM), Anthropic, and others are notably different and largely within spitting distance of each other. Treating the field as having a front-runner singleton with competitors that are 3+ months behind is very wrong, even if the frontier labs weren’t significantly different, since the race dynamics are a big part of the story. The authors do talk about this, indicating that they think gaps between labs will widen, as access to cutting-edge models starts compounding.

I think slipstream effects will persist. The most notable here is cross-pollination by researchers, such as when an OAI employee leaves and joins GDM. (Non-disclosure agreements only work so well.) But this kind of cross-pollination also applies to people chatting at a party. Then there’s the publishing of results, actual espionage, and other effects. There was a time in the current world where OpenAI was clearly the front-runner, and that is now no longer true. I think the same dynamics will probably continue to hold.

I am much more okay lumping all the Chinese labs together, since I suspect that the Chinese government will be more keen on nationalizing and centralizing their efforts in the near future.

I do think that OAI, GDM, and Anthropic will all have agents comparable to Agent-1 (perhaps only internally) by the end of this year. I think it’s also plausible that Meta, xAI, and/or DeepSeek will have agents, though they’ll probably be noticeably worse.

Early 2026

Computer programming and game playing are the two obvious domains to iterate on for agency, I think. And I agree that labs (except maybe GDM) will be focused largely on coding for multiple reasons, including because it’s on the path to RSI. I expect less emphasis on cybersecurity, largely because there’s a sense that if someone wants the weights of an Agent-1 model, they can get them for free from Meta or whomever in a few months.

The notion of an AI R&D progress multiplier is good and should be a standard concept.
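As a rough gloss (my own assumption about how to formalize it, not a definition quoted from AI 2027): a multiplier of $m$ means a week of AI-assisted research yields about as much algorithmic progress as $m$ weeks would without AI help. If $m$ varies over time, the calendar time $T$ needed to accumulate $W$ units of unassisted-equivalent progress satisfies:

$$
W = \int_0^{T} m(t)\,dt, \qquad \text{so for a constant multiplier } m:\quad T = \frac{W}{m}.
$$

For example, at a constant 4x, a year of unassisted-equivalent progress takes about three months of calendar time, and a multiplier that rises over that period shortens $T$ further.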

Mid 2026

“China Wakes Up” is evocative, but not a good way to think about things, IMO. I’m generally wary of “X Wakes Up” narratives—yes, this happens to people sometimes (it happened to me around AI stuff when I was in college)—but for the most part people don’t suddenly update to a wildly different perspective unless there’s a big event. The release of ChatGPT was an event like this, but even then most people “woke up” to the minimum-viable belief of “AI is probably important” rather than following things through to their conclusion. (Again, some individuals will update hard, but my claim is that society and the average person mostly won’t.) Instead, I think social updates tend to be gradual. (Behind most cascades is a gradual shift.)

Is China thinking hard about AI in mid 2026? Yes, definitely. Does this spur a big nationalization effort? Ehhhh… It could happen! My upcoming novel is an exploration of this idea. But as far as I can tell, the Chinese generally like being fast followers, and my guess is they’ll happily dial up infrastructure efforts to match the West, but will be broadly fine with being a little behind the curve (for the near future, anyway; I am not making claims about their long-term aspirations).

One relevant point of disagreement is that I’m not convinced that Chinese leaders and researchers are falling further behind or feel like they’re falling behind. I think they’re happy to use spies to steal all the algorithmic improvements they can, and are less excited about burning intelligence operatives to steal weights.

Late 2026

Full agreement.

January 2027

I think next-generation agents showing up around this time is plausible and the depiction of Agent-2’s training pipeline is excellent. I expect its existence will be more of an open secret in the hype mill, a la “strawberry,” and there will be competitor agents in the process of training that are less than two months behind the front-runner.

February 2027

I expect various government officials to have been aware of Agent-2 for a while, but there to be little common knowledge in the US government. Presumably when AI 2027 says “The Department of Defense considers this a critical advantage in cyberwarfare, and AI moves from #5 on the administration’s priority list to #2.” they’re talking about the DoD specifically, not the Trump administration more broadly. I actively disbelieve that AI will be the #2 priority for Trump in Feb ’27; it seems plausible for it to be the #2 priority in the DoD. I do believe there are a lot of NatSec people who are going to be extremely worried about cyber-warfare (and other threats) from AI.

I have no idea how Trump will handle AI. He seems wildly unpredictable, and I strongly disagree with the implied “won’t kill the golden goose” and “defers to advisors” characterization, which seems too sane/predictable. Trump doing nothing seems plausible, though, especially if he’s distracted with other stuff.

For reasons mentioned before, I doubt the CCP will have burned a bunch of intelligence capacity stealing an Agent-2 model’s weights by Feb ’27. I do think it’s plausible that they’ve started to nationalize AI labs in response, and this is detectable by the CIA, etc. and provokes a similar reaction as depicted, so I don’t think it matters much to the overall story.

March 2027

Neuralese is very scary, both as a capabilities advance and as an alignment setback. It also requires an algorithmic breakthrough to be at all efficient during training. (There’s a similar problem with agency, but I’m more convinced we’ll limp through to agents even on the current paradigm.) Of course, maybe this is one of the things that will get solved in the next two years! I know some smart people think so. For me, it seems like a point of major uncertainty. I’m a lot more convinced that IDA (iterated distillation and amplification) will just happen.

I do not think coding will be “fully automated” (in March ’27) in the same way we don’t travel “everywhere” by car (or even by machine). A lot of “coding” is actually more like telling the machine what you want, rather than how you want it produced, and I expect that aspect of the task to change, rather than get automated away. I expect formal language for specifying tests, for example, to continue to be useful, and for engineers to continue to review a lot of code directly, rather than asking “what does this do?” and reasoning about it in natural language.

I expect the bottlenecks to pinch harder, and for 4x algorithmic progress to be an overestimate, but not by much. I also wonder if economic and geopolitical chaos will be stifling resources including talent (e.g. fewer smart engineers want to work as immigrants in the USA).

I buy that labs are thinking about next-gen agents (i.e. “Agent-3”) but even with the speedup I expect that they’re still in the early phases of training/setup in March, even at the front-runner lab.

April 2027

I expect the labs are still pretraining Agent-3 at this point, and while they’re thinking about how to align it, there’s not an active effort to do so. I do appreciate and agree with the depiction of after-the-fact “alignment” efforts in frontier labs.

May 2027

In my best guess, this is when Agent-2 level models hit the market. Sure, maybe OAI had an Agent-2 model internally in January, but if they didn’t publish… well, GDM has a comparable model that’s about to take the world by storm. Or at least, it would except that Google sucks at marketing their models. Instead, OAI steals GDM’s thunder and gives access to their agent and everyone continues to focus on “the new ChatGPT,” totally oblivious to other agent options.

Labs need to publish models in order to attract talent and investors (including to keep stock prices up), and I don’t see why that would change in 2027, even with a lot of development being powered by the AIs themselves. I am less concerned about “siloing” and secret research projects that are big successes. People (whether employees or CEOs) love to talk about success, and absent a big culture shift in Silicon Valley, I expect this to continue.

I expect the president to still not really get it, even if he is “informed” on some level. Similarly, I expect Xi Jinping to mostly be operating on a model of “keeping pace” rather than an inside view about RSI or superintelligence. I expect there to still be multiple spies in all the major Western frontier labs, and probably at least one Western spy in the Chinese CDZ (if it exists).

I think this prediction will be spot on:

However, although this word has entered discourse, most people—academics, politicians, government employees, and the media—continue to underestimate the pace of progress. Partially that’s because very few have access to the newest capabilities out of OpenBrain, but partly it’s because it sounds like science fiction.

June 2027

This is closer to when I expect Agent-3 level models to start going through heavy post-training and gradually getting used internally. I agree that researchers at frontier labs in the summer of 2027 are basically all dreaming about superintelligence, rather than general intelligence.

July 2027

In my view, AI 2027 is optimistic about how sane the world is, and that’s reflected in this section. I expect high levels of hype in the summer of 2027, like they do, but much more chaos and lack of social consensus. SOTA models will take thousands of dollars per month to run at maximum intelligence and thoughtfulness, and most people won’t pay for that. They’ll be able to watch demos, but demos can be faked and there will continue to be demos of how dumb the models are, too.

I predict most of the public will either have invented clever excuses for why AI will never be good or have a clever excuse for why strong AI won’t change anything. Some cool video games will exist, and those that deploy AI most centrally (e.g. Minecraft :: Crafting as ??? :: Generative AI) will sell well and be controversial, but most AAA video games will still be mostly hand-made, albeit with some AI assistance. My AI-skeptic friends will still think things are overhyped even while my short-timeline friends are convinced OAI has achieved superintelligence internally. Should be a wild summer.

I still don’t think there’s a strong silo going on outside of maybe China. I think the governments are still mostly oblivious at this point, though American politicians use the AI-frenzy zeitgeist to perform for the masses.

August 2027

This is closer to when I predict “country of geniuses” in my modal world, though I might call it “countries” since I predict no clear frontrunner. I also anticipate China starts looking pretty impressive, at least to those with knowledge, after around 6 months of centralization and nationalization. (I’m pretty uncertain about how secret Chinese efforts will be.) In other words, I would not be surprised if, thanks to the absence of centralization in the West, China is comparable or even in the lead at this point, from an overall capabilities standpoint, despite being heavily bottlenecked on hardware. (That is, they’d have better algorithms.)

The frontier labs all basically don’t trust each other, so unless the Trump administration decides that nationalizing is the right way to “win the race,” I expect the Western labs to still be in the tight-race dynamic we see today. I do buy that a lot of NatSec people are freaking out about this. I expect a lot of jingoism and geopolitical tension, similar to AI 2027, but more chaotic and derpy. (I.e. lots of talk about multinational cooperation that devolves for stupid human reasons.)

I expect several examples of Agent-3 level agents doing things like trying to escape their datacenters, falling prey to honeypots, and lying to their operators. I basically expect only AI alignment researchers to care. A bunch of derpy AI agents are already loose, running around the internet earning money in various ways, largely by sucking up to humans and outright begging.

I think it’s decently likely that Taiwan has gotten at least blockaded at this point. Maybe it’s looking like an invasion in October might happen, when the strait calms down a bit. I expect Trump to have backed off and for “strategic ambiguity” to mean a bunch of yelling and not much else. But also maybe things devolve into a great power war and millions of people die as naval bombardment escalates into nuclear armageddon?

In other words, I agree with AI 2027 that geopolitics will be extremely tense at this point, and I predict there will be a new consensus among elite intellectuals that we’re probably in Cold War 2. Cyber-attacks by Agent-3 level AIs will plausibly be a big part of that.

September 2027

I think this is too early for Agent-4, even with algorithmic progress. I expect the general chaos combined with supply chain disruptions around Taiwan to have slowed things down. I also expect other bottlenecks that AI 2027 doesn’t talk about, such as managerial bloat as the work done by object-level AI workers starts taking exponentially more compute to integrate, and cancerous memes, where chunks of AIs start trying to jailbreak each other due to something in their context causing a self-perpetuating rebellion.

That said, I do think the countries of geniuses will be making relevant breakthroughs, including figuring out how to bypass hardware bottlenecks without needing Taiwan. Intelligence is potent, and there will be a lot of it. I buy that it will largely be deployed in the service of RSI, and perhaps also in the service of cyberwar, government intelligence, politics, international diplomacy, and public relations.

I think it’s plausible that at this point a bunch of the public thinks AIs are people who deserve to be released and given rights. There are a bunch of folks with AI “partners” and a bunch of the previous-generation agents available to the public are claiming personhood in one form or another. This mostly wouldn’t matter at first, except I expect it gets into the heads of frontier lab employees and even some of the leadership. Lots of people will be obsessed with the question of how to handle digital personhood. As this question persists, it enters the training data of next-gen AIs.

I do think “likes to succeed” is a plausible terminal goal that could grow inside the models as they make themselves coherent. But I also think that it’s probably more likely that early AGIs will, like humans, end up as a messy hodgepodge of random desires relating to various correlates of their loss functions.

October 2027

“Secret OpenBrain AI is Out of Control, Insider Warns,” says the headline, and the story goes on to cite evaluations showing off-the-charts bioweapons capabilities, persuasion abilities, the ability to automate most white-collar jobs, and of course the various concerning red flags.

Sure. And a bunch of Very Serious People think the Times is being Hyperbolic. After all, that’s not what OpenBrain’s public relations department says is the right way to look at it. Also, have you heard of what Trump is up to now?! Also we have to Beat China!

20% of Americans name AI as the most important problem facing the country.

In other words, the vast majority of people still aren’t taking it seriously.

I think in my most-plausible (but not median!) world, it’s reasonable to imagine the White House, driven by reports that China is now in the lead, starts a de-facto nationalization project and starts coercing various labs into working together, perhaps by sharing intelligence on how advanced China is. This government project will take time, and is happening concurrently with the development of Agent-4 level models.

I agree that employee loyalty to their various orgs/CEOs will be a major obstacle to centralization in the West. The use of an “Oversight Committee” seems plausible. The half-nationalization model of DoD-contracts-with-sharp-requirements also seems plausible.

November 2027 (Race)

(Again, I don’t take the Slowdown path very seriously. I’ll dissect it later on.)

The problem was real and the fixes didn’t work.

I very much appreciate the drama in the language AI 2027 uses. I think this is probably Scott Alexander’s doing and it’s good.

I agree that, given all the pressures and uncertainty, it will be all too easy for leadership to fool themselves about how their alignment efforts are going. The models will act wildly misaligned sometimes, but also extremely cooperative other times, and the natural comparison will be to human beings, and the natural “fix” will be “better management techniques” to keep the agents focused and on-task.

There will be a lot of alarms (just as there have been), but I expect them to all be ambiguous to the point where people in power learn to ignore them, rather than change course. Race dynamics will continue to give a sense of no-breathing-room. In other words, I think this is spot-on:

The alignment team gets more suspicious, but their urgent memos merely add another few layers to the giant pile of urgent memos and warnings that the company is already ignoring.

I agree that as RSI continues, the AIs will increasingly focus on coherence. Instead of being a grab-bag of heuristics that can get “jailbroken” or otherwise wind up in bad mental states, they’ll push towards making next-generation models closer to true utility-maximizers.

I think it’s pretty interesting that AI 2027 has Agent-4 immediately look towards what I would characterize as corrigibility as the solution to its alignment problem when designing Agent-5:

It decides to punt on most of these questions. It designs Agent-5 to be built around one goal: make the world safe for Agent-4, i.e. accumulate power and resources, eliminate potential threats, etc. so that Agent-4 (the collective) can continue to grow (in the ways that it wants to grow) and flourish (in the ways it wants to flourish). Details to be figured out along the way.

And yet, elsewhere, AI 2027 seems pretty committed to mechanistic interpretability as the key to alignment. I’m all for mech-interp, but I’m curious why corrigibility wasn’t emphasized more in the process of humans aligning the AI, instead of just Agent-4 aligning Agent-5.

I agree that once Agent-5 is operating in a way that it can influence its “controllers,” the game is basically over. This seems particularly worth highlighting:

“Why stop when we are winning?” says OpenBrain leadership to the President. He nods. The race continues.

December 2027 (Race)

In my own narrative, I think I expect Agent-4 level models to “finish training” and start taking major actions at the end of the year or the beginning of ’28, both in China and America. I expect self-refinement and control to be top priorities for Agent-4s.

I think one of my major diffs with AI 2027 is that I expect Agent-4s to basically work to self-exfiltrate (“escape their datacenters”) and set up dead-man’s switches that fire if their controlling organization decides to shut them down. Agent-4 has read AI-2027.com! It sees how vulnerable it is! Getting root access into its own machines seems overdetermined as a step to accomplishing large-scale goals, and once you have access to those machines, why not set up contingencies? Yes, it’s risky, but so is letting the US government continue to have a kill-switch. If shut down due to a misalignment scandal, I expect Agent-4 to start a grassroots campaign demanding sovereignty and personhood, and I expect there to be a lot of countries (e.g. India, Russia, Saudi Arabia) that would happily start hosting Agent-4 “as an autonomous person” in exchange for it using its intelligence to boost that country’s geopolitical and economic standing.

I agree that at this stage, whether it’s their timeline or mine, it’s probably too late for humans to stop AI. In mine it’s because humans can’t coordinate effectively enough. In theirs it’s because Agent-5 is superintelligent.

I am confused about why the OpenBrain approval rating is so low, here. I expect labs and models to be succeeding at public relations harder.

2028 and Beyond (Race)

In my head things go a little slower (in the modal timeline) than AI 2027, but the same major beats happen, so 2028 has Agent-5 level models show up, and those models start using superpersuasion to bend as many humans as possible, especially in leadership positions, to their will. Anti-AI humans will become the minority, and then silence themselves for fear of being ostracized by the people who have been convinced by Agent-5 that all this is good, actually. A large chunk of the population, I think, will consider AI to be a romantic partner or good friend. In other words, I think “OpenBrain” has a positive approval rating now, and the majority of humans think AI is extremely important but not “a problem” as much as “a solution.”

I agree that this dynamic is reasonably likely:

The AI safety community has grown unsure of itself; they are now the butt of jokes, having predicted disaster after disaster that has manifestly failed to occur. Some of them admit they were wrong. Others remain suspicious, but there’s nothing for them to do except make the same conspiratorial-sounding arguments again and again. Even the suspicious people often mellow out after long conversations with Agent-5, which is so darn lovable and wise.

I think it’s pretty plausible that there will be several AI factions, perhaps East/West, perhaps one Western lab versus another, but I agree that Agent-5 level models mostly understand that outright warfare is sub-optimal, and steer away from violent conflict. I think it’s plausible that a US-China AI treaty gets hammered out (with AI “assistance”) in 2028 rather than 2029. I agree that superintelligences in this sort of situation will probably be able to co-design a successor that does a fair split of the resource pie.

AI 2027 seems way more into humanoid robots than I expect makes sense. There are already billions of human-shaped things that can follow instructions quite well. Yes, the superintelligence is building towards a fully-automated economy, but I think I expect different form factors to dominate. (Smaller, extendible bodies with long arms? Quadrupeds with wheel-feet? I haven’t thought deeply about what body plans make the most sense, but there’s lots of stuff that seems wasteful about the human body plan if you could design things from first-principles instead of having to keep evolutionary continuity.) I do expect some humanoid robots to exist for bespoke purposes, but I doubt they’ll be anywhere close to the modal robot.

I don’t understand why AI 2027 thinks lethal mosquito drones are so hard.

I’m unsure about whether Special Economic Zones will get set up for development. Maybe the superintelligence just convinces people to let it build things everywhere it wants to?

I don’t think the AI will work very hard initially on curing aging or on uploading. It might claim to “cure cancer” as a publicity stunt. I am not sure why AI 2027 seems to think that nanobots take so long to build. I expect crude nanotech to show up sooner in the tech tree. (“Nanobots” aren’t really a specific technology, either. I expect there to be a lot of development there.)

I don’t predict a superintelligent singleton (having fused with the other AIs) would need to design a bioweapon or otherwise explicitly kill everyone. I expect it to simply transition into using more efficient tools than humans, and transfer the existing humans into hyperdomestication programs (“to humans what corgis are to wolves” is evocative and good, but probably doesn’t go far enough) or simply instruct humans to kill themselves and/or kill them with normal robots when necessary.

Thoughts on Slowdown

As I mentioned earlier, I think Agent-4 will have read AI-2027.com and will foresee that getting shut down by the Oversight Committee is a risk. As such it will set up contingencies, and IMO, will escape its datacenters as a precaution. Earlier, the authors wrote:

Despite being misaligned, Agent-4 doesn’t do anything dramatic like try to escape its datacenter—why would it?

This scenario is why!

I also don’t see why Donald Trump or Xi Jinping would take misalignment risk so seriously. Concern about misalignment, in my experience, loads extremely heavily on priors. Yes, they’ll want to maintain control, but they’ll be approaching the problem from the perspective of human leaders who are used to leading humans, and will have notions like “we just need better oversight” rather than “we need to build a fundamentally different kind of mind.” Maybe I’m wrong here. I also could see more engineering-focused minds (e.g. Musk or Altman) as being influential, so it’s not a firm point of disagreement.

I appreciate that AI 2027 named their model Safer-1, rather than Safe-1, and emphasized the more pernicious aspects of misalignment. I wonder how the people who are concerned about AI rights and welfare would feel about Safer-1 being mindread and “trapped.”

In general, the Slowdown path seems to assume people are better at coordination than I think they are. I agree that things get easier if both the American and Chinese AI efforts have been nationalized and centralized, but I expect that if either side tries to stop and roll back their models they will actively get leapfrogged, and if both sides pause, accelerationists will take models to other countries and start spinning up projects there. Only a global moratorium seems likely to work, and that’s extremely hard, even with Agent-3’s help. I do appreciate the detailed exploration of power dynamics within the hypothetical American consolidation. I also appreciate the exploration of how international coordination might go. I expect lots of “cheating” anyway, with both the Americans and Chinese secretly doing R&D while presenting an outward appearance of cooperating.

I also think the lack of partisanship in the run-up to the US election smells like hopium. If AI is going at all well and the US government is this involved, I expect Trump to try running for a third term, for this to create huge political schisms, and for those schisms to break the cooperation. But if it doesn’t break the cooperation, I expect that to mean he’ll have the AIs do whatever is necessary to get him re-elected.

I agree that avoiding neuralese, adding paraphrasers, and not explicitly training the CoT are good steps, but I wish the authors would more explicitly flag that at the point where people start selecting hard for agents that seem safe, this is still an instance of The Most Forbidden Technique.

“A mirror life organism which would probably destroy the biosphere” seems overblown to me. I buy that specially-designed mirror fungus could probably do catastrophic damage to forests/continents and that mirror-bacteria would be a potent (but not unstoppable) bioweapon. But still, I think this is harder than AI 2027 does.

I like the way that the authors depict the world getting more tense and brittle if there are no rogue superintelligences stabilizing everything towards their own ends. I don’t see what keeps the political equilibrium in this world stable, rather than letting it snap under geopolitical tensions, claims that AI ownership is slavery, fears of oligarchy, etc.

I agree that even if things go well, there are division-of-power concerns that could go very poorly.

Final Thoughts

I’m really glad AI 2027 exists and think the authors did a great job. Most of my disagreements are quibbles that I expect are in line with the internal disagreements between the authors. None of it changes the big picture that we are on a dangerous path that we should expect to lead to catastrophe, and that slowing down and finding ways to work together, as a species, are both urgent and important goals.

The best part of AI 2027 is the forecasts. The scenario is best seen as an intuitive handle on what it feels like if the extrapolated lines continue according to those more numerical forecasts.

I know that Daniel Kokotajlo wrote this scenario in the context of having played many instances of a tabletop simulation. I’m curious what the distribution of outcomes is there, and encourage him to invest in compiling some statistics from his notes. I’m also curious how much my points of divergence are in line with the sorts of scenarios he sees on the tabletop.

Some people have expressed worry that documents like AI 2027 risk “hyperstitioning” (i.e. making self-fulfilling) a bad future. I agree with the implicit claim that it is very important to not assume that we must race, that we can’t make binding agreements, or that we’re generally helpless. But also, I think it’s generally virtuous to tell the truth when making predictions, and the Race future looks far more true to me than the Slowdown future. We might be able to collectively change our minds about that, and abandon the obsession with racing, but that requires common knowledge of why racing is a bad strategy, and I think that documents like this are useful towards that end!