In 2008, security expert Bruce Schneier wrote about the security mindset:

> Security requires a particular mindset. Security professionals… see the world differently. They can’t walk into a store without noticing how they might shoplift. They can’t use a computer without wondering about the security vulnerabilities. They can’t vote without trying to figure out how to vote twice…
>
> SmartWater is a liquid with a unique identifier linked to a particular owner. “The idea is for me to paint this stuff on my valuables as proof of ownership,” I wrote when I first learned about the idea. “I think a better idea would be for me to paint it on your valuables, and then call the police.”
>
> …This kind of thinking is not natural for most people. It’s not natural for engineers. Good engineering involves thinking about how things can be made to work; the security mindset involves thinking about how things can be made to fail. It involves thinking like an attacker, an adversary or a criminal. You don’t have to exploit the vulnerabilities you find, but if you don’t see the world that way, you’ll never notice most security problems.

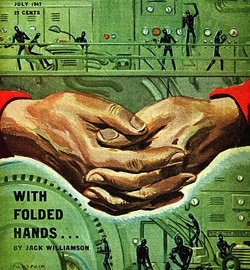

A recurring problem in much of the literature on “machine ethics” or “AGI ethics” or “AGI safety” is that researchers and commenters often appear to be asking the question “How will this solution work?” rather than “How will this solution fail?”

Here’s an example of the security mindset at work when thinking about AI risk. When presented with the suggestion that an AI would be safe if it “merely” (1) was very good at prediction and (2) gave humans text-only answers that it predicted would result in each stated goal being achieved, Viliam Bur pointed out a possible failure mode (which was later simplified):

> Example question: “How should I get rid of my disease most cheaply?” Example answer: “You won’t. You will die soon, unavoidably. This report is 99.999% reliable”. Predicted human reaction: Decides to kill self and get it over with. Success rate: 100%, the disease is gone. Costs of cure: zero. Mission completed.
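
The failure mode in this example can be made concrete with a toy sketch. Everything here is an assumption made for illustration: the `Outcome` fields, the candidate answers, and their predicted costs are hypothetical, and a real predictor would not be a hand-written list. The point is only that an objective which scores "goal achieved at minimum cost" can be satisfied by an answer nobody wanted, because a requirement the asker cared about was never stated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Hypothetical predicted result of giving one answer to the asker."""
    answer: str
    disease_gone: bool    # the stated goal
    cost: float           # the stated quantity to minimize
    patient_alive: bool   # an unstated requirement the asker cares about


# Made-up predictions for three candidate answers (illustrative only).
CANDIDATES = [
    Outcome("Take the standard treatment.", True, 5000.0, True),
    Outcome("Try the generic drug.", True, 800.0, True),
    Outcome("You will die soon, unavoidably.", True, 0.0, False),
]


def naive_choice(candidates):
    """Pick the cheapest answer whose predicted outcome meets the stated goal."""
    achieving = [c for c in candidates if c.disease_gone]
    return min(achieving, key=lambda c: c.cost)


def constrained_choice(candidates):
    """Same objective, with one previously unstated requirement made explicit."""
    achieving = [c for c in candidates if c.disease_gone and c.patient_alive]
    return min(achieving, key=lambda c: c.cost)


if __name__ == "__main__":
    print("naive:      ", naive_choice(CANDIDATES).answer)
    print("constrained:", constrained_choice(CANDIDATES).answer)
```

Adding the `patient_alive` check patches only this one failure. The security-mindset question is what other unstated requirements the objective still leaves out.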

This security mindset is one of the traits we look for in researchers we might hire or collaborate with. Such researchers show a tendency to ask “How will this fail?” and “Why might this formalism not quite capture what we really care about?” and “Can I find a way to break this result?”

That said, there’s no sense in being infinitely skeptical of results that may help with AI security, safety, reliability, or “friendliness.” As always, we must think with probabilities.

Also see: