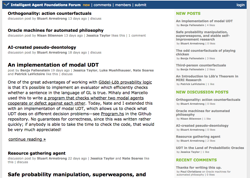

Today we are proud to publicly launch the Intelligent Agent Foundations Forum (RSS), a forum devoted to technical discussion of the research problems outlined in MIRI’s technical agenda overview, along with similar research problems.

Patrick’s welcome post explains:

Broadly speaking, the topics of this forum concern the difficulties of value alignment: the problem of how to ensure that machine intelligences of various levels adequately understand and pursue the goals that their developers actually intended, rather than getting stuck on some proxy for the real goal or failing in other unexpected (and possibly dangerous) ways. As these failure modes are more devastating the farther we advance in building machine intelligences, MIRI’s goal is to work today on the foundations of goal systems and architectures that would work even when the machine intelligence has general creative problem-solving ability beyond that of its developers, and has the ability to modify itself or build successors.

The forum has been privately active for several months, so many interesting articles have already been posted, including:

- Slepnev, Using modal fixed points to formalize logical causality

- Fallenstein, Utility indifference and infinite improbability drives

- Benson-Tilsen, Uniqueness of UDT for transparent universes

- Christiano, Stable self-improvement as a research problem

- Fallenstein, Predictors that don’t try to manipulate you(?)

- Soares, Why conditioning on “the agent takes action a” isn’t enough

- Fallenstein, An implementation of modal UDT

- LaVictoire, Modeling goal stability in machine learning

Also see How to contribute.