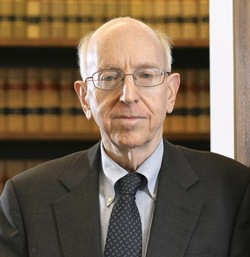

Richard Posner is a jurist, legal theorist, and economist. He is also the author of nearly 40 books, and is by far the most-cited legal scholar of the 20th century.

In 2004, Posner published Catastrophe: Risk and Response, in which he discusses risks from AGI at some length. His analysis is interesting in part because it appears to be intellectually independent from the Bostrom-Yudkowsky tradition that dominates the topic today.

In fact, Posner does not appear to be aware of earlier work on the topic by I.J. Good (1970, 1982), Ed Fredkin (1979), Roger Clarke (1993, 1994), Daniel Weld & Oren Etzioni (1994), James Gips (1995), Blay Whitby (1996), Diana Gordon (2000), Chris Harper (2000), or Colin Allen (2000). He is not even aware of Hans Moravec (1990, 1999), Bill Joy (2000), Nick Bostrom (1997; 2003), or Eliezer Yudkowsky (2001). Basically, he seems to know only of Ray Kurzweil (1999).

Still, much of Posner’s analysis is consistent with the basic points of the Bostrom-Yudkowsky tradition:

> [One class of catastrophic risks] consists of… scientific accidents, for example accidents involving particle accelerators, nanotechnology…, and artificial intelligence. Technology is the cause of these risks, and slowing down technology may therefore be the right response.
>
> …there may some day, perhaps some day soon (decades, not centuries, hence), be robots with human and [soon thereafter] more than human intelligence…
>
> …Human beings may turn out to be the twenty-first century’s chimpanzees, and if so the robots may have as little use and regard for us as we do for our fellow, but nonhuman, primates…
>
> …A robot’s potential destructiveness does not depend on its being conscious or able to engage in [e.g. emotional processing]… Unless carefully programmed, the robots might prove indiscriminately destructive and turn on their creators.
>
> …Kurzweil is probably correct that “once a computer achieves a human level of intelligence, it will necessarily roar past it”…

One major point of divergence seems to be that Posner worries about a scenario in which AGIs become self-aware, re-evaluate their goals, and decide not to be “bossed around by a dumber species” anymore. In contrast, Bostrom and Yudkowsky think AGIs will be dangerous not because they will “rebel” against humans, but because (roughly) using all available resources — including those on which human life depends — is a convergent instrumental goal for almost any set of final goals a powerful AGI might possess. (See e.g. Bostrom 2012.)