Michael Fisher is a professor of Computer Science, specialising in logical methods and automated formal verification, and Director of the multi-disciplinary Centre for Autonomous Systems Technology at the University of Liverpool and member of the Logic and Computation group in the Department of Computer Science.

Michael Fisher is a professor of Computer Science, specialising in logical methods and automated formal verification, and Director of the multi-disciplinary Centre for Autonomous Systems Technology at the University of Liverpool and member of the Logic and Computation group in the Department of Computer Science.

Professor Fisher is also a Fellow of both the BCS and the IET, and a member of the UK Computing Research Committee. He is Chair of the Department’s Industrial Liaison Committee.

Luke Muehlhauser: In “Verifying Autonomous Systems” (2013), you and your co-authors summarize some recent approaches to formally verifying autonomous systems. At one point, you write:

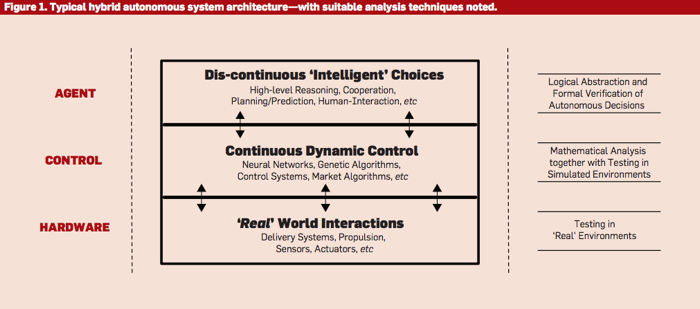

Many autonomous systems, ranging over unmanned aircraft, robotics, satellites, and even purely software applications, have a similar structure, namely layered architectures as summarized in Figure 1. Although purely connectionist/sub-symbolic architectures remain prevalent in some areas, such as robotics, there is a broad realization that separating out the important/difficult choices into an identifiable entity can be very useful for development, debugging, and analysis. While such layered architectures have been investigated for many years they appear increasingly common in autonomous systems.

The accompanying figure is:

What can you say about how common you think something like your “typical hybrid autonomous system architecture” is? E.g. do you think that kind of architecture is in use in ~20 different real-world applications, or ~200 different real-world applications, or perhaps more? Do you think there’s a trend in favor of such architectures? Is there a trend toward such architectures even in robotics, do you think?

Michael Fisher: It is difficult to get definitive evidence about the internal architectures of autonomous systems, especially commercial ones, so I only have anecdotal information about systems constructed in this way. However, from my discussions with engineers, both in academia and industry (aerospace and automotive), it appears that the use of this sort of architecture is increasing. It might not be termed an “agent architecture”, indeed the “decision maker” might not be termed an “agent” at all, but systems with a separate “executive” or “control module” that explicitly deals with high-level decisions, fit into our model.

I am not sure whether robotic architectures are also moving in this direction, but there seems to be a theme of adding a “monitor” or “governor” that can assess the safety or ethics of proposed actions before the robot takes them ((Arkin, R. “Governing Lethal Behavior: Embedding Ethics in a Hybrid Deliberative/Reactive Robot Architecture“. Technical Report GIT-GVU-07-11, Georgia Tech, 2007.)), ((Woodman, R., Winfield, A., Harper, C. and Fraser, M. “Building Safer Robots: Safety Driven Control“. International Journal of Robotic Research 31(13):1603–1626, 2012.)) . This can alternatively be viewed as an additional agent making high-level decisions (what to permit, what to block) and so this approach might also be seen as a variety of hybrid agent architecture.

Luke: In that same paper, you and your co-authors explain that

Since autonomous software has to make its own decision, it is often vital to know not only what the software does and when it does it but also why the software chooses to do it.

…A very useful abstraction for capturing such autonomous behavior within complex, dynamic systems turns out to be the concept of an agent. Since the agent concept came into prominence in the 1980s, there has been vast development within both academia and industry. It has become clear this agent metaphor is very useful for capturing many practical situations involving complex systems comprising flexible, autonomous, and distributed components. In essence, agents must fundamentally be capable of flexible autonomous action.

However, it turns out the “agent” concept on its own is still not enough! Systems controlled by neural networks, genetic algorithms, and complex control systems, among others, can all act autonomously and thus be called agents, yet the reasons for their actions are often quite opaque. Because of this, such systems are very difficult to develop, control, and analyze.

So, the concept of a rational agent has become more popular. Again, there are many variations but we consider this to be an agent that has explicit reasons for making the choices it does, and should be able to explain these if necessary.

I previously explored the relative transparency of different AI methods in Transparency in Safety-Critical Systems, where I asked:

How does the transparency of a method change with scale? A 200-rules logical AI might be more transparent than a 200-node Bayes net, but what if we’re comparing 100,000 rules vs. 100,000 nodes? At least we can query the Bayes net to ask “what it believes about X,” whereas we can’t necessarily do so with the logic-based system.

What are your thoughts on how well the transparency of rational agent models will scale? Or, do you think the “rational agent” part of future autonomous systems will be kept fairly small (e.g. to enable transparency and verification) even as the subsystems at lower “layers” in the architecture may grow massively in complexity?

Michael: I would say that the high-level rules within rational agents remain relatively transparent even as their numbers increase. It’s just that the proof/analysis mechanisms do not scale so well.

So, as you suggest, the intention is to keep the rational agent part as small as possible. Formal verification is quite costly, so any verification of very large agents is likely to be infeasible.

By only dealing with the high-level, rational agent decisions, we aim to avoid handling the vast state-space of the whole system. The use of logical (specifically, BDI) rules abstracts us from much low-level detail.

Concerning your question about “100,000 rules vs. 100,000 nodes”, I would hope that the symbolic logic language used helps us reduce this to 1000, or even 100, rules (vs. 100,000 nodes).

Luke: Does your hierarchical autonomous system approach, with a Belief-Desire-Intention rational agent “on top,” integrate with hybrid system control approaches, or would it be an alternative to hybrid system control approaches?

Michael: Yes, it fits together with hybrid system approaches and, certainly, the continuous and stochastic aspects are important for modelling real-world interactions. You can possibly see our approach as providing further detail about the discrete steps within hybrid systems.

Luke: In the future, do you think the logical agent specification in the top-level role in hierarchical autonomous systems will eventually need to be replaced by a more flexible, probabilistic/fuzzy reasoning approach, so as to be a better match for the environment? Or could a logical agent specification play that role even as we build systems (several decades from now) that approach human-like, fully general reasoning and planning abilities?

Michael: The latter. We are working towards being able to specify and verify probabilistic, and even real-time, agent behaviours at this top level. (Currently we can do either agent verification or probabilistic verification, but we are developing combination mechanisms.) The high-level agent will likely become increasingly complex as the sophistication of applications develop and may well incorporate different reasoning and decision components. Our approach at present is that planning components are within the separate, low-level, modules. The agent just calls these and then makes decisions between the plan options produced.

Luke: Interesting. Could you say a bit more about the combination mechanisms you’re developing?

Michael: Formally verifying basic logical properties is already difficult. However, once we tackle autonomous systems that might well be used in realistic situations, then we are often led to much more complex logical queries. For example, imagine we wish to verify a property such as

when asked, the probability of the robot replying within 30 seconds is at least 80%

Already we have moved beyond basic properties to incorporate both probabilities (>80%) and real-time aspects (within 30s). But, with autonomous systems we would like to verify not just what the system (e.g. a robot) does but what its intentions and beliefs are. So we might even want to verify

when asked, the probability of the robot believing the request has been made and then intending to reply within 30 seconds is at least 80%

The problem here is that the logical framework we need to describe such requirements is already quite complex. Combinations of logics are often difficult to deal with and combinations of logics of probability, real-time, intention, belief, etc., have not yet been studied in great detail.

We are aiming to develop and extend some theoretical work we have been doing on model checking of complex logical combinations in order to tackle this problem of more comprehensive autonomous system verification.

Luke: Thanks, Michael!