MIRI recently sponsored Oxford researcher Stuart Armstrong to take a solitary retreat and brainstorm new ideas for AI control. This brainstorming generated 16 new control ideas, of varying usefulness and polish. During the past month, he has described each new idea, and linked those descriptions from his index post: New(ish) AI control ideas.

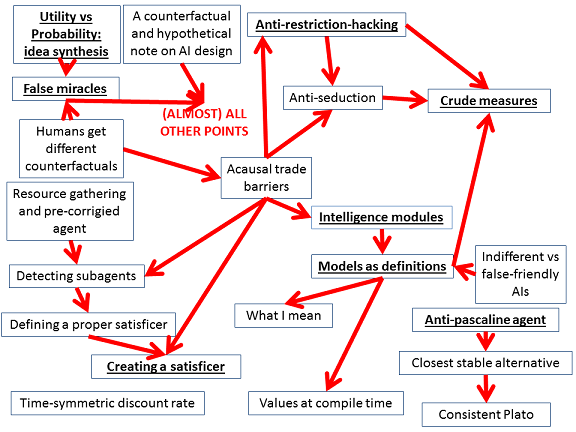

He also named each AI control idea and drew a picture to represent (very roughly) how the new ideas relate to each other. In the picture above, an arrow Y→X can mean “X depends on Y”, “Y is useful for X”, “X complements Y on this problem” or “Y inspires X.” The underlined ideas are the ones Stuart currently judges to be most important or developed.

Previously, Stuart developed the AI control idea of utility indifference, which plays a role in MIRI’s paper Corrigibility (Stuart is a co-author). He also developed anthropic decision theory and some ideas for reduced impact AI and oracle AI. He has contributed to the strategy and forecasting challenges of ensuring good outcomes from advanced AI, e.g. in Racing to the Precipice and How We’re Predicting AI — or Failing To. MIRI previously contracted him to write a short book introducing the superintelligence control challenge to a popular audience, Smarter Than Us.