Laurent Orseau has been an associate professor (maître de conférences) at AgroParisTech, Paris, France, since 2007. In 2003, he graduated with a professional master’s degree in computer science from the National Institute of Applied Sciences in Rennes and a research master’s degree in artificial intelligence from the University of Rennes 1. He obtained his PhD in 2007. His goal is to build a practical theory of artificial general intelligence. With his co-author Mark Ring, he was awarded the Solomonoff AGI Theory Prize at AGI’2011 and the Kurzweil Award for Best Idea at AGI’2012.

Luke Muehlhauser: In the past few years you’ve written some interesting papers, often in collaboration with Mark Ring, that use AIXI-like models to analyze some interesting features of different kinds of advanced theoretical agents. For example in Ring & Orseau (2011), you showed that some kinds of advanced agents will maximize their rewards by taking direct control of their input stimuli — kind of like the rats who “wirehead” when scientists give them direct control of the input stimuli to their reward circuitry (Olds & Milner 1954). At the same time, you showed that at least one kind of agent, the “knowledge-based” agent, does not wirehead. Could you try to give us an intuitive sense of why some agents would wirehead, while the knowledge-based agent would not?

Laurent Orseau: You’re starting with a very interesting question!

This is because knowledge-seeking has a fundamental distinctive property: Unlike rewards, knowledge cannot be faked by manipulating the environment. The agent cannot itself introduce new knowledge in the environment because, well, it already knows what it would introduce, so it’s not new knowledge. Rewards, by contrast, can easily be faked.

I’m not 100% sure, but it seems to me that knowledge seeking may be the only non-trivial utility function that has this non-falsifiability property. In Reinforcement Learning, there is an omnipresent problem called the exploration/exploitation dilemma: The agent must both exploit its knowledge of the environment to gather rewards, and explore its environment to learn if there are better rewards than the ones it already knows about. This implies in general that the agent cannot collect as many rewards as it would like.

But for knowledge seeking, the goal of the agent is to explore, i.e., exploration is exploitation. Therefore the above dilemma collapses to doing only exploration, which is the only meaningful unified solution to this dilemma (the exploitation-only solution leads either to very low rewards or is possible only when the agent already has knowledge of its environment, as in dynamic programming). In more philosophical words, this unifies epistemic rationality and instrumental rationality.

Note that the agent introduced in Orseau & Ring (2011), and better developed in Orseau (2011) where a convergence proof is given, actually works only for deterministic environments. Its problem is that it may consider noise as information, and get addicted to it, i.e., it may stare at a detuned TV screen forever. And one could well consider this as “self-delusion”.

Fortunately, with Tor Lattimore and Marcus Hutter, we are finalizing a paper for ALT 2013 where we considered all computable stochastic environments. This new agent does not have the defective behavior of the 2011 agent, and I think it would behave better even in deterministic environments. For example, it would (it seems) not focus for too long on the same source of information, and may from time to time get back to explore the rest of the environment before eventually coming back to the original source; i.e., it is not a monomaniac agent.

A side note: If you (wrongly) understand knowledge-seeking as learning to predict all possible futures, then a kind of self-delusion may be possible: The agent might just jump into a trap, where all its observations would be the same whatever its actions, and it would thus have converged to optimal prediction. But we showed that the knowledge-seeking agent would give no value to such actions.
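The intuition in this answer can be made concrete with a small sketch. It is only an illustration under simplifying assumptions, not the agent defined in the cited papers: the hypotheses are deterministic, the agent holds a prior over a handful of them, and an action's value is the expected reduction in uncertainty about which hypothesis is true. The function name `expected_information_gain` and the action names are hypothetical. An action whose observation is the same under every hypothesis (the "trap", or anything the agent could have produced itself) is worth exactly zero.

```python
import math

def expected_information_gain(prior, predictions):
    """Expected entropy reduction over hypotheses after seeing one action's outcome.

    prior:       dict mapping hypothesis -> probability
    predictions: dict mapping hypothesis -> the observation that (deterministic)
                 hypothesis predicts for this action
    """
    # Probability of each observation under the prior mixture.
    obs_prob = {}
    for hypothesis, weight in prior.items():
        obs = predictions[hypothesis]
        obs_prob[obs] = obs_prob.get(obs, 0.0) + weight
    # With deterministic hypotheses, the expected gain equals the entropy
    # of the predicted observation distribution (in bits).
    return sum(p * math.log2(1.0 / p) for p in obs_prob.values() if p > 0)

# Toy prior over three hypothetical environments.
prior = {"h1": 0.5, "h2": 0.25, "h3": 0.25}

# What each hypothesis predicts the agent would observe after each action.
actions = {
    "look_around":    {"h1": "a", "h2": "b", "h3": "c"},  # hypotheses disagree
    "jump_into_trap": {"h1": "x", "h2": "x", "h3": "x"},  # same observation everywhere
}

gains = {name: expected_information_gain(prior, preds) for name, preds in actions.items()}
best = max(gains, key=gains.get)
print(gains, best)
```

In this toy setting the trap action scores zero because its outcome is already known, while the discriminating action scores 1.5 bits, so a knowledge-seeking chooser prefers it.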

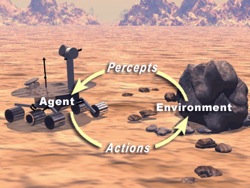

Luke: In two other papers, you and Mark Ring addressed a long-standing issue in AI: the naive Cartesian dualism of the ubiquitous agent-environment framework. Could you explain why the agent-environment framework is Cartesian, and also what work you did in those two papers?

Laurent: In the traditional agent framework, we consider that the agent interacts with its environment by sending, at each interaction cycle, an action that the environment can take into account to produce an observation, which the agent can in turn take into account to start the next interaction cycle and output a new action. This framework is very useful in practice because it avoids a number of complications of real life. Those complications are exactly what we wanted to address head on, because at some point you need to pull your head out of the sand and start dealing with the complex but important issues. But certainly many people, and in particular people working with robots, are quite aware that the real world is not a dualist framework. So in a sense, it was an obvious thing to do, especially because it seems that no one had done it before, at least from this point of view and to the best of our knowledge.

The traditional framework is dualist in the sense that it considers that the “mind” of the agent (the process with which the agent chooses its actions) lies outside of the environment. But we all know that if we ever program an intelligent agent on a computer, this program and process will not be outside of the world, they will be a part of it and, even more importantly, computed by it. This led us to define our space-time embedded intelligence framework and equation.

Put simply, the idea is to consider a (realistic) environment, and a memory block of some length on some computer or robot in this environment. Then what is the best initial configuration of the bits on this memory block according to some measure of utility upon the expected future history?
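The question posed here can be sketched as a brute-force search. This is a toy under loud assumptions, not the equation from the papers: the memory block is tiny, the environment is a hypothetical function `toy_environment` that returns a short list of weighted histories for a given initial block, and utility is a hypothetical `count_ones`. The only point is that the "agent" is nothing more than the initial bits, and the environment is free to overwrite them.

```python
from itertools import product

def best_initial_memory(n_bits, environment, utility):
    """Return the n-bit initial memory block with the highest expected utility.

    environment(bits) returns a list of (probability, history) pairs;
    utility(history) returns a number.
    """
    def expected_utility(bits):
        return sum(p * utility(history) for p, history in environment(bits))

    # Enumerate all 2**n_bits initial configurations (feasible only for toy sizes).
    return max(product((0, 1), repeat=n_bits), key=expected_utility)

def toy_environment(bits):
    # Assumption for illustration: with probability 0.9 the world leaves the
    # block alone; with probability 0.1 it overwrites the first bit with 0.
    intact = tuple(bits)
    damaged = (0,) + tuple(bits[1:])
    return [(0.9, intact), (0.1, damaged)]

def count_ones(history):
    # Hypothetical utility: the number of 1 bits in the resulting history.
    return sum(history)

best = best_initial_memory(3, toy_environment, count_ones)
print(best)
```

Real environments and memory sizes make this enumeration hopeless, which is consistent with the remark later in this answer that the framework is hard to work with in practice.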

Some people worry that this is too general (in particular if you just consider some block of bits in an environment, not necessarily on a computer) and that we lose the essence of agency, which is to deal with inputs and outputs. But they forget that a) this systemic framework does also allow for defining stones (simply ignore the inputs and output a constant value) and b) this is how the real world is: If an AGI can duplicate itself and split itself in so many parts on many computers, robots and machines, how can we really identify this agent as a single systemic entity?

Some other people worry about how this framework could be used in practice, and that it is too difficult to deal with. Our goal was not to define a framework where theorems are simple to prove and algorithms simple to write, but to define a framework for AGI that is closer to the real world. If the latter is difficult to deal with, then so be it. But don’t blame the framework, blame the real world. Anyway, we believe there are still interesting things to do with this framework. And I believe it is at least still useful to help people not forget that the real world is different from the usual textbook simplifications. This is probably not very relevant for applied machine learning and narrow AI research, but I believe it is very important for AGI research.

However, let me get one thing straight: Even in the traditional framework, an agent can still predict that it may be “killed” (in some sense), for example if an anvil falls on its body. This is possible if the body of the agent, excluding the brain but including its sensors and effectors, is considered to be part of the environment: The agent can then predict that the anvil will destroy them, and that it will then be unable to receive any information or reward from the environment or to perform any action on it. Whenever we consider our skull (or rather, the skull of the robot) and brain to always be unbreakable, non-aging, and not subject to drugs, alcohol and external events such as heat, accelerations and magnetic waves, we can quite safely use the traditional framework.

But remove one of these hypotheses and the way the agent computes may become different from what it assumes it would be, hence leading to different action selection schemes. Regarding artificial agents, tampering with source code is even easier than with a human brain. AGIs of the future will probably face a gigantic number of cracking and modification attempts, and the agent itself and its designers should be well aware that this source code and memory are not in a safe dualist space. In the “Memory issues of intelligent agents” paper, we considered various consequences of giving the environment the possibility to read and write the agent’s memory of the interaction history. It appears difficult for the agent to be aware of such modifications in general. We must not forget that amnesia happens for people, and it may happen for robots too, e.g. after a collision. And security by obfuscation can only delay memory hackers, even when considering a natural brain. Another interesting consequence is that deterministic policies cannot always be optimal, unlike in the dualist framework, where the optimal agent AIXI is deterministic.

Luke: Do you think the AIXI framework, including the limited but tractable approximations like MC-AIXI, provides a plausible path toward real-world AGI? If not, what do you see as its role in AGI research?

Laurent: Approximating AIXI can be done in very many ways. The main ideas are building/finding good and simple models of the environment, and performing some planning on these models; i.e., it is a model-based approach (in contrast to Q-learning, for example, which is model-free: it does not model the environment, but only learns to predict the expected rewards per action/state). This is a very common approach in reinforcement learning, because some may argue that model-free methods are “blind”, in the sense that they don’t learn about their environments, they just “know” what to do. Another important component of AIXI is the interaction history (instead of a state-based observation), and approximations may need to deal appropriately with compressing this history, possibly with loss. Hutter is working on this aspect with feature RL, with nice results. So yes, approximating AIXI can be seen as a very plausible way toward real-world AGI.
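The model-based versus model-free distinction can be illustrated with a minimal sketch. It assumes a tiny deterministic environment with hypothetical states `s0`, `s1`, `s2` and actions `left`/`right`; the tabular Q-learning update is the standard one, while `plan` is a plain depth-limited lookahead chosen here for brevity, not AIXI or any approximation of it. After one pass over the same three transitions, the Q-table has not yet propagated the delayed reward back to `s0`, whereas the planner finds it by searching the learned model.

```python
def q_learning_update(q, state, action, reward, next_state, actions, alpha=0.5, gamma=0.9):
    """Model-free: adjust the value estimate for (state, action); no model is stored."""
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)

def plan(model, state, actions, depth, gamma=0.9):
    """Model-based: depth-limited lookahead in a learned deterministic model.

    Returns (value, best_action); transitions missing from the model are skipped.
    """
    if depth == 0:
        return 0.0, None
    best_value, best_action = 0.0, None
    for a in actions:
        if (state, a) not in model:
            continue
        next_state, reward = model[(state, a)]
        value = reward + gamma * plan(model, next_state, actions, depth - 1, gamma)[0]
        if best_action is None or value > best_value:
            best_value, best_action = value, a
    return best_value, best_action

actions = ["left", "right"]
# (state, action, reward, next_state): "right" twice from s0 reaches a reward of 1.0.
experience = [("s0", "right", 0.0, "s1"), ("s1", "right", 1.0, "s2"), ("s0", "left", 0.1, "s0")]

q, model = {}, {}
for s, a, r, s_next in experience:
    q_learning_update(q, s, a, r, s_next, actions)  # learns expected returns only
    model[(s, a)] = (s_next, r)                     # learns how the world responds

greedy_q_action = max(actions, key=lambda a: q.get(("s0", a), 0.0))
planned_value, planned_action = plan(model, "s0", actions, depth=2)
print(greedy_q_action, planned_action, planned_value)
```

With more passes over the data Q-learning would also come to prefer `right`; the sketch only shows that the two approaches extract different things from the same experience.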

Finding computation-efficient approximations is not an easy task, and it will quite probably require a number of neat ideas that will make it feasible, but it’s certainly a path worth researching. However, personally, I prefer to think that the agent must learn how to model its environment, which is something deeper than a model-based approach.

Even without considering AIXI approximations, AIXI still is very important for AGI research because it unifies all important properties of cognition, like agency (interaction with an environment), knowledge representation and memory, understanding, reasoning, goals, problem solving, planning and action selection, abstraction, generalization without overfitting, multiple hypotheses, creativity, exploration and curiosity, optimization and utility maximization, prediction, uncertainty, with incremental, on-line, lifelong, continual learning in arbitrarily complex environments, without a restart state, no i.i.d. or stationarity assumption, etc. and does all this in a very simple, elegant and precise manner. I believe that if one does not understand what a tour de force AIXI is, one cannot seriously hope to tackle the AGI problem. People tend to think that simple ideas are easy to find because they are easy to read or to explain orally. But they tend to forget that easy to read does not mean easy to grok, and certainly not easy to find! The simplest ideas are the best ones, especially in research.

Luke: You speak of AIXI-related work as a pretty rich subfield with many interesting lines of research to pursue that are related to universal agents. Do you think there are other AGI-related lines of inquiry that are as promising or productive as AIXI-related work? For example Schmidhuber’s Gödel machine, the SOAR architecture, etc.?

Laurent: I can’t really say much about the cognitive architectures. It looks very difficult to say whether such a design would work correctly autonomously over several decades. It’s interesting work with nice ideas, but I can’t see what kind of confidence I could have in such designs when regarding long-term AGI. That’s why I prefer simple and general approaches, formalized with some convergence proof or a proof of another important property, and that can give you confidence that your design will work for more than a few days ahead.

Regarding the Gödel machine (GM), I do think it’s a very nice design, but I have two gripes with it. The first one is that it’s currently not sufficiently formalized, so it’s difficult to state if and how it really works. The second one is that it relies on an automated theorem prover. Searching for proofs is extremely complicated. To draw a parallel with Levin search (LS): given a goal output string (an improvement in the GM), you enumerate programs (propositions in the GM) and run them to see if they output the goal string (search for a proof of improvement in the GM). This last part is the problem: in LS, the programs are fast to run, whereas in GM there is an additional search step for each proposition, so this looks very roughly like going from exponential (LS) to double-exponential (GM). And LS is already not really practical.
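For readers unfamiliar with Levin search, the enumerate-and-run loop described above can be sketched as follows. This is a toy under stated assumptions: the "programs" are strings over a hypothetical two-instruction language (`+` adds 1, `*` doubles, starting from 1), each instruction costs one step, and in phase k a program of length l gets a budget of 2^(k - l) steps. Real Levin search runs over programs for a universal machine; only the time-sharing scheme is kept here.

```python
from itertools import product

def run(program, max_steps):
    """Toy interpreter: start from 1; '+' adds 1 and '*' doubles.

    Each instruction costs one step. Returns the final value, or None if the
    program would exceed its step budget.
    """
    if len(program) > max_steps:
        return None
    value = 1
    for op in program:
        value = value + 1 if op == "+" else value * 2
    return value

def levin_search(goal, max_phase=20):
    """Return (program, phase) for the first program found that outputs goal."""
    for phase in range(1, max_phase + 1):
        for length in range(1, phase + 1):
            # Shorter programs get exponentially more time in each phase.
            budget = 2 ** (phase - length)
            for ops in product("+*", repeat=length):
                program = "".join(ops)
                if run(program, budget) == goal:
                    return program, phase
    return None

result = levin_search(10)
print(result)
```

Even in this toy the number of candidates doubles with every extra instruction, which gives a feel for the exponential cost mentioned in the answer; the Gödel machine's extra proof search per candidate is not modeled.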

Theorem proving is even more complicated when you need to prove that there will be an improvement of the system at an unknown future step. Maybe it would work better if the kinds of proofs were limited to some class, for example use simulation of the future steps up to some horizon given a model of the world. These kinds of proofs are easier to check and have a guaranteed termination, e.g. if the model class for the environment is based on Schmidhuber’s Speed Prior. But this starts to look pretty much like an approximation of AIXI, doesn’t it?

Reinforcement Learning in general is very promising for AGI: Even though most researchers of this field are not directly interested in AGI, they still try to find the most general possible methods while remaining practical. It’s far more obvious in RL than in the rest of Machine Learning.

But I think there are many lines of research that could be pushed toward some kind of AGI level. In machine learning, some fields like Genetic Programming, Inductive Logic Programming, Grammar Induction and (let’s get bold) why not Recurrent Deep Neural Networks (probably with an additional short-term memory mechanism of some sort), and possibly other research fields, are all based on some powerful induction schemes that could well lead to thinking machines if researchers in those fields wanted to. Schmidhuber’s OOPS is also a very interesting design, based on Levin Search. It is limited in that it cannot truly reach the learning-to-learn level, but could be extended with a true probability distribution over programs, as described by Solomonoff. And there is of course also the neuroscience way of trying to understand or at least model the brain.

Luke: Thanks, Laurent!