Momentum is picking up in the domain of AI safety engineering. MIRI needs to grow fast if it’s going to remain at the forefront of this new paradigm in AI research. To that end, we’re kicking off our 2015 Summer Fundraiser!

Rather than naming a single funding target, we’ve decided to lay out the activities we could pursue at different funding levels and let you, our donors, decide how quickly we can grow. In this post, I’ll describe what happens if we hit our first two fundraising targets: $250,000 (“continued growth”) and $500,000 (“accelerated growth”).

Continued growth

Over the past twelve months, MIRI’s research team has had a busy schedule — running workshops, attending conferences, visiting industry AI teams, collaborating with outside researchers, and recruiting. We’ve presented papers at two AAAI conferences and an AGI conference, attended FLI’s Puerto Rico conference, written four papers that have been accepted for publication later this year, produced around ten technical reports, and posted a number of new preliminary results to the Intelligent Agent Foundations Forum.

That’s what we’ve been able to do with a three-person research team. What could MIRI’s researchers accomplish with a team two or three times as large?

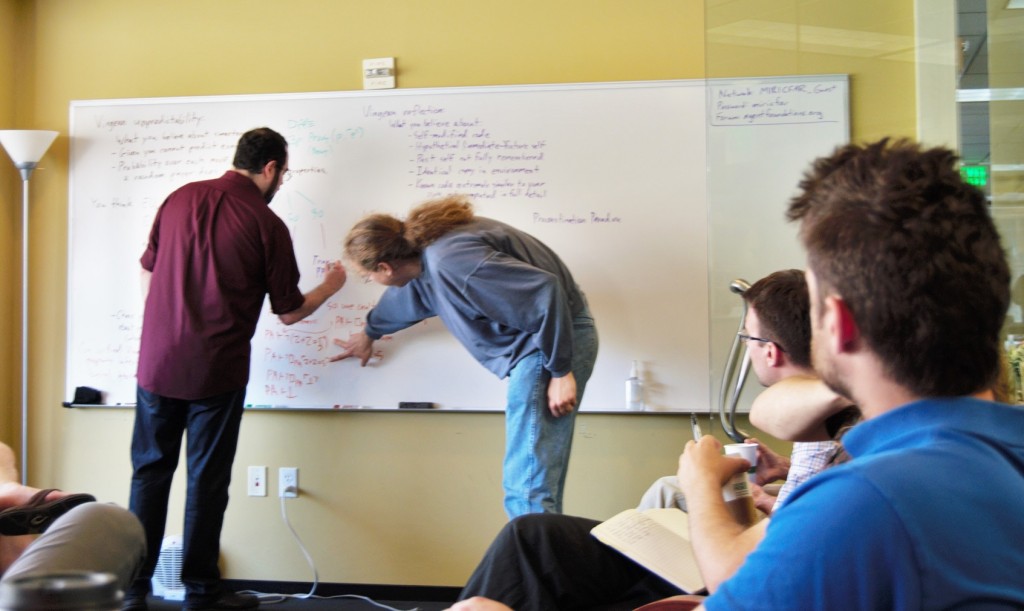

MIRI research fellows Eliezer Yudkowsky and Benja Fallenstein discuss reflective reasoning at one of our July workshops.

Thanks to a few game-changing donations and grants from the last few years, we have the funds to hire several new researchers: Jessica Taylor will be joining our full-time research team on August 1, and we’ll be taking on another new research fellow on September 1. That will bring our research team up to five, and leave us with about one year of runway to continue all of our current activities.

To grow even further — especially if we grow as quickly as we’d like to — we’ll need more funding.

Our activities this summer have been aimed at further expanding our research team. The MIRI Summer Fellows program, designed in collaboration with the Center for Applied Rationality to teach mathematicians and AI researchers some AI alignment research skills, is currently underway; and in three weeks, we’ll be running the fifth of six summer workshops aimed at bringing our research agenda to a wider audience.

Hitting our first funding target, $250,000, would allow us to hire the very best candidates from our summer workshops and fellows program. That would mean 1–3 additional researchers without shortening our runway, bringing the total size of the research team up to 6–8.

Accelerated growth

A team of eight, though, still does not approach the limit of how fast we could grow this year if we really hit the accelerator. This is a critical juncture for the field of AI alignment, and we have a rare opportunity to help set the agenda for the coming decades of AI safety work. Our donors tend to be pretty ambitious, so it’s worth asking:

How fast could we grow if MIRI had its largest fundraiser ever?

If we hit our $250,000 funding target before the end of August, it will be time to start thinking big. Our second funding target, $500,000, would allow us to recruit even more aggressively, and expand to a roughly ten-person core research team. This is about as large as we think we can grow in the near term without a major increase in our recruitment efforts.

Ten researchers would massively enhance our ability to polish our new results into peer-reviewed publications, attend conferences, and run workshops. Most importantly, a team of that size would give us vastly more time to devote to basic research. We have identified dozens of open problems that appear relevant to the task of aligning smarter-than-human AI with the right values; with a larger team, our hope is to start solving those problems quickly.

But growing the core research team as fast as is sustainable in the short term is not all we could be doing: in fact, there are a number of other projects waiting on the sidelines.

Because researchers at MIRI have been thinking about the AI alignment problem for a long time, we have quite a few projects which we intend to work on as soon as we have the necessary time and funding. At the $500,000 level, we can start executing on several of the highest-priority projects without diverting attention away from our core technical agenda.

Project: Type theory in type theory. There are a number of tools missing in modern-day theorem-provers that would be necessary in order to study certain types of self-referential reasoning. (Roughly speaking, in order to study self-reference in a modern dependently typed programming language, we need to be able to write programs that can manipulate and typecheck quoted programs written in the same language. This would be a ripe initial setting in which to study programs that reason about themselves with high confidence. However, to our knowledge, nobody has yet written, for example, the type of Agda programs in Agda, though there have been some promising first steps.) Given the funding, we would hire one or two type theorists to work on developing relevant tools full-time.

Our type theory project would enable us to study programs reasoning about similar programs in a concrete setting: lines of code, as opposed to equations on whiteboards. The tools developed could also be quite useful to the type theory community, as they would have a number of short-term applications, such as building modular and highly secure operating systems in which a verifier component can verify that it is OK to swap itself out for a more powerful verifier. We would benefit from the input of specialists in type theory, from the tools themselves, and from increased engagement with the larger type theory community, which is an important community when it comes to writing highly reliable computer programs.

Project: Visiting scholar program. Another project we can quickly implement given sufficient funding is a visiting scholar program. One surefire way to increase our engagement with the academic community would be to have interested professors drop by for the summer, while we pay their summer salaries and work with them on projects where our interests overlap. These sorts of visits, combined with a few visiting graduate students or postdocs each summer, would likely go a long way towards getting academic communities more involved with the open problems that we think are most urgent, while also giving us the opportunity to get input from some of the top minds in the modern field of AI.

A program such as this could be extended into one where MIRI directly supports graduate students and/or professors who are willing to spend some portion of their time on AI alignment research. This program could also prove useful if MIRI reaches the point of forming new research groups specializing in approaches to the AI alignment challenge other than the one described in our technical agenda. Those extensions of the program would require significantly more time and funding, but at the $500,000 level we could start sowing the seeds.

MIRI’s future shape

That’s only a brief look at the new activities we could take on with appropriate funding. More detailed exposition is forthcoming, both about the state of our existing projects and about the types of projects we are prepared to execute on given the opportunity. Stay tuned for future posts!

If these plans have already gotten you excited, then you can help make them a reality by contributing to our summer fundraiser. There is much to be done in the field of AI alignment, and both MIRI and the wider field of AI will be better off if we can make real progress as early as possible. Future posts will discuss not only the work we want to branch out into, but also reasons why we think that this is an especially critical period in the history of the field, and why we expect that giving now will be much more valuable than waiting to give later.

Until then, thank you again for all of your support — our passionate donor base is what has enabled us to grow as much as we have over the last few years, and we feel privileged to have this chance to realize the potential our supporters have seen in MIRI.