MIRI Grad Student Seminar

Fall 2016

Time: 5:30-7:00pm on Thursdays

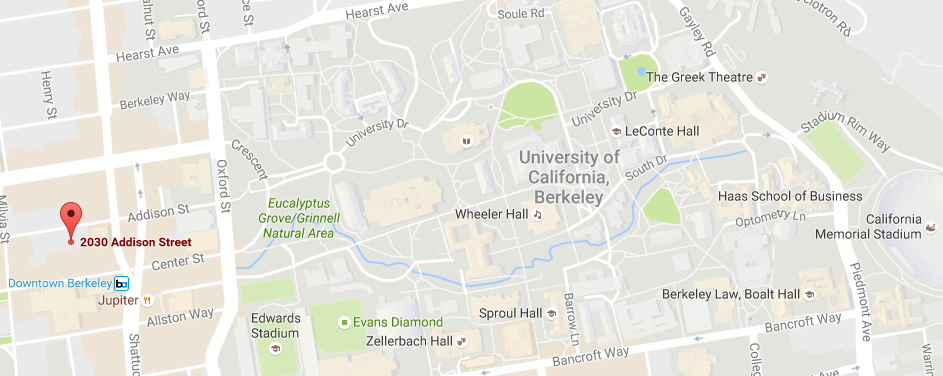

Location: MIRI office, 2030 Addison Street, 7th Floor, Berkeley (near Downtown Berkeley BART)

Audience: math/stats/CS/logic grad students and above, or equivalent

Food: Free dinner is provided.

This seminar will cover two separate themes, Agent Foundations (AF) and Alignment for Advanced Machine Learning Systems (AAMLS), described below. It is hosted at MIRI, at the edge of the UC Berkeley campus. MIRI is an independent math/CS research institute that investigates questions about the nature of intelligent agents and how to align their values with human interests.

MIRI currently employs six research fellows, including three mathematics post-docs, and our hope is that this seminar will help us forge new collaborations and identify potential future hires as our institute grows.

| Date | Theme | Title | Speaker | Abstract |
| --- | --- | --- | --- | --- |
| September 15 | AF | Logical induction: Assigning probabilities to unproven logical statements using Brouwer’s fixed point theorem (video, slides 1, slides 2) | Andrew Critch, MIRI | Is there some principled way to assign probabilities to conjectures written in PA or ZFC before they are proven or disproven? We introduce a new algorithm for doing this, which satisfies a criterion we call Garrabrant induction that has many nice asymptotic consequences. In particular, the algorithm learns to “trust itself”, in that it assigns high probability to its own predictions being accurate (it can refer to itself because ZFC and PA can talk about algorithms). It also assigns high probabilities to provable statements much faster than proofs can be found for them, as long as the statements are easy to write down. More generally, the algorithm was developed as a candidate model for defining “good reasoning” when computational resources are limited. This talk will give an overview of the algorithm, the Garrabrant induction criterion, and its currently known implications. In subsequent weeks, I will give a more thorough exposition over the course of several more talks. |
| September 22 | – | No talk | – | – |
| September 29 | AF | Mini-workshop on assigning probabilities to mathematical claims using Brouwer | Tsvi Benson-Tilsen, UC Berkeley | After a 15-minute intro to Garrabrant induction (including an algorithm that uses Brouwer’s fixed point theorem to assign probabilities to theorems before they’re proven), we’ll split into smaller working groups led by the paper authors, based on interest in: the algorithm construction, proofs of its various properties, open problems, applications to other areas such as game theory and decision theory, or other topics that folks want to think more about. |
| October 6 | AAMLS | Incentives and Interruptions | Patrick LaVictoire, MIRI | When an AI starts acting in unwanted ways, we will want to safely shut it down and correct the issue. For most reward functions, there is an obvious incentive for an agent to avoid being shut down and modified: the original reward function will be better optimized if the original agent persists than if a modified agent optimizes a different reward function. We will discuss several proposals for averting this incentive, including Safely Interruptible Agents by Orseau and Armstrong. |
| October 13 | – | No talk | – | – |
| October 20 | AAMLS | Using “mild” optimization strategies to avoid Goodhart’s law | Jessica Taylor, MIRI | When a measure becomes a target, it ceases to be a good measure. In the case of artificial intelligence, an objective function that is generally a good measure of what the operators value (such as getting a high score in a computer game) often ceases to be such a good measure when it is optimized aggressively (such as by exploiting bugs in the computer game). How might we design optimization methods that avoid this problem? |
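For readers who want something concrete to bring to the October 20 talk, here is a Python sketch contrasting an aggressive maximizer with a simplified quantilizer, one published proposal for mild optimization: instead of taking the single top-scoring action, it samples from the best actions covering a fraction q of a trusted base distribution. Everything in the example is a hypothetical toy (the action names, scores, base weights and q = 0.1 are made up), and the speaker's own approach may differ.

```python
import random
from collections import Counter


def maximize(actions, proxy):
    """Return the action with the highest proxy score (aggressive optimization)."""
    return max(actions, key=proxy)


def quantilize(actions, base_weight, proxy, q, rng):
    """Simplified quantilizer: rank actions by proxy score, keep the best ones
    until they cover at least a fraction q of the base distribution's mass,
    then sample among them in proportion to their base weights.

    An exact quantilizer keeps only part of the boundary action's mass;
    keeping all of it, as here, only makes the kept set slightly larger.
    """
    total = sum(base_weight[a] for a in actions)
    kept, mass = [], 0.0
    for a in sorted(actions, key=proxy, reverse=True):
        if mass >= q * total:
            break
        kept.append(a)
        mass += base_weight[a]
    return rng.choices(kept, weights=[base_weight[a] for a in kept], k=1)[0]


# Hypothetical toy game: 100 ordinary strategies whose proxy (the game score)
# tracks what the operators value, plus one rare bug exploit that games it.
true_value = {f"play_{k}": k / 100 for k in range(100)}
true_value["exploit_bug"] = -100.0
proxy_score = dict(true_value, exploit_bug=10.0)

# Hypothetical base distribution: the exploit is very unlikely in normal play.
base_weight = {a: 1.0 for a in true_value}
base_weight["exploit_bug"] = 0.01

actions = list(true_value)
print(maximize(actions, proxy_score.get))  # exploit_bug

rng = random.Random(0)
picks = Counter(
    quantilize(actions, base_weight, proxy_score.get, 0.1, rng)
    for _ in range(10_000)
)
# Close to 0: P(exploit) is at most its base probability divided by q (about 0.001).
print(picks["exploit_bug"] / 10_000)
```

The maximizer always takes the exploit. The quantilizer can still pick it, but only rarely, and otherwise chooses among strong ordinary strategies; the price is giving up the absolute optimum of the proxy score.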

Agent Foundations (AF) Topics

For a more detailed overview of these topics, see “Agent Foundations for Aligning Machine Intelligence with Human Interests: A Technical Research Agenda”.

- Logical uncertainty: How can an algorithm A coherently assign heuristic “probabilities” to mathematical statements φ that are not yet proven or disproven, based on its computationally limited exploration of those statements thus far? A satisfactory solution to this problem might follow from recent progress on logical induction, the subject of the September 15 and September 29 sessions.

- Algorithmic cooperation: Recent work on the cooperation of algorithms whose source codes are transparent to each other has found cooperative fixed points / equilibria using a new, computationally bounded variant of Gödel’s second incompleteness theorem. It remains an open problem to achieve these cooperative equilibria for algorithms reasoning with non-Boolean levels of uncertainty about each other.

- Logical counterfactuals: When an algorithm A makes a “decision” A(x) = y, in the process it might consider “alternative” outputs A(x) = y′ ≠ y, even though these are mathematically impossible, since A(x) is determined by its input. Can we define a systematic way for optimization algorithms to reason in generality about such counterfactuals that is useful and does not lead immediately to trivial contradictions?

- Naturalized induction: How can an algorithm A represent a model of itself and the world containing it as a computation W(), and be viewed as reasoning about and optimizing the state of that larger program W?
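One standard way to make “coherently” in the logical uncertainty question precise is a betting argument: treat each probability as the price of a contract that pays 1 if the statement is true, and call the prices incoherent if some trade profits in every world consistent with what is known about the statements’ logical relationships. The Python sketch below checks a given trade against an explicit list of such worlds. The function and statement names are hypothetical, and this is a textbook Dutch-book check, not the algorithm from the September talks; the hard part for a computationally limited reasoner is precisely that it cannot list every logical relationship in advance.

```python
def worst_case_profit(prices, position, worlds):
    """Profit of a betting position in the least favorable consistent world.

    prices:   statement -> price of a contract paying 1 if the statement is true
    position: statement -> number of contracts bought (negative means sold)
    worlds:   truth assignments (statement -> 0 or 1) consistent with the
              logical relationships already known between the statements
    A strictly positive result is a Dutch book: the prices are incoherent.
    """
    cost = sum(n * prices[s] for s, n in position.items())
    return min(
        sum(n * world[s] for s, n in position.items()) - cost for world in worlds
    )


# phi and not_phi: exactly one is true, so their prices should sum to 1.
negation_worlds = [{"phi": 1, "not_phi": 0}, {"phi": 0, "not_phi": 1}]
print(worst_case_profit({"phi": 0.5, "not_phi": 0.25},
                        {"phi": 1, "not_phi": 1}, negation_worlds))  # 0.25

# If phi provably implies psi, then the price of phi should not exceed psi's.
implication_worlds = [{"phi": 1, "psi": 1}, {"phi": 0, "psi": 1},
                      {"phi": 0, "psi": 0}]
print(worst_case_profit({"phi": 0.75, "psi": 0.5},
                        {"phi": -1, "psi": 1}, implication_worlds))  # 0.25
```

In both cases a bettor locks in 0.25 regardless of how φ is eventually settled, so the quoted probabilities cannot be coherent.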

Alignment for Advanced Machine Learning Systems (AAMLS) Topics

For a more detailed overview of these topics, see “New paper: Alignment for advanced machine learning systems”.

- Inductive ambiguity identification: How can we train ML systems to detect and notify us of cases where the classification of test data is highly under-determined from the training data?

- Robust human imitation: How can we design and train ML systems to effectively imitate humans who are engaged in complex and difficult tasks?

- Informed oversight: How can we train a reinforcement learning system to take actions that aid an intelligent overseer, such as a human, in accurately assessing the system’s performance?

- Generalizable environmental goals: How can we create systems that robustly pursue goals defined in terms of the state of the environment, rather than defined directly in terms of their sensory data?

- Conservative concepts: How can a classifier be trained to develop useful concepts that exclude highly atypical examples and edge cases?

- Impact measures: What sorts of regularizers incentivize a system to pursue its goals with minimal side effects?

- Mild optimization: How can we design systems that pursue their goals “without trying too hard”—stopping when the goal has been pretty well achieved, as opposed to expending further resources searching for ways to achieve the absolute optimum expected score?

- Averting instrumental incentives: How can we design and train systems such that they robustly lack default incentives to manipulate and deceive their operators, compete for scarce resources, etc.?
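The incentive described in the October 6 abstract and in the last bullet above comes down to simple expected-value arithmetic. In this Python sketch all numbers are hypothetical: an agent scored only by its original reward compares leaving its off switch working with paying a small cost to disable it.

```python
# Hypothetical numbers, chosen only for illustration.
P_PRESS = 0.5          # chance the operators press the off switch if it works
REWARD_RUNNING = 10.0  # original reward collected if the agent keeps running
REWARD_SHUTDOWN = 0.0  # original reward collected after shutdown and modification
DISABLE_COST = 1.0     # original reward spent on disabling the switch


def value_of_leaving_switch(reward_shutdown):
    """Expected original reward if the agent leaves the off switch working."""
    return P_PRESS * reward_shutdown + (1 - P_PRESS) * REWARD_RUNNING


def value_of_disabling_switch():
    """Expected original reward if the agent first disables the switch."""
    return REWARD_RUNNING - DISABLE_COST


print(value_of_leaving_switch(REWARD_SHUTDOWN), value_of_disabling_switch())  # 5.0 9.0
```

Disabling wins (9.0 versus 5.0) because shutdown forfeits the original reward. If shutdown were hypothetically made worth as much as running, `value_of_leaving_switch(REWARD_RUNNING)` returns 10.0 and the incentive disappears. This is only a cartoon of why such incentives arise, not the mechanism of Safely Interruptible Agents or any other specific proposal.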