Thanks to Peter Thiel, every donation made to MIRI between now and January 15th, 2014 will be matched dollar-for-dollar!

Also, gifts from “new large donors” will be matched 3x! That is, if you have given less than $5k in total to SIAI/MIRI in the past, and you now give or pledge $5k or more, Thiel will donate $3 for every dollar you give or pledge.

We don’t know whether we’ll be able to offer the 3:1 matching ever again, so if you’re capable of giving $5k or more, we encourage you to take advantage of the opportunity while you can. Remember that:

- If you prefer to give monthly, no problem! If you pledge 6 months of monthly donations, your full 6-month pledge will be the donation amount to be matched. So if you give monthly, you can get 3:1 matching for only $834/mo (or $417/mo if you get matching from your employer).

- We accept Bitcoin (BTC) and Ripple (XRP), both of which have recently jumped in value. If the market value of your Bitcoin or Ripple is $5k or more on the day you make the donation, this will count for matching.

- If your employer matches your donations at 1:1 (check here), then you can take advantage of Thiel’s 3:1 matching by giving as little as $2,500 (because it’s $5k after corporate matching).

Please email malo@intelligence.org if you intend to use corporate matching or would like to pledge 6 months of monthly donations, so that we can properly account for your contributions toward the fundraiser.
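For readers who want to check the figures above, here is a rough sketch of the arithmetic in Python. The function names are made up for illustration, and the sketch assumes that an employer match counts toward the $5k threshold and that monthly gifts are whole dollars. It ignores the overall cap on Thiel’s match, so treat it as a back-of-the-envelope check rather than an official calculator.

```python
import math

THRESHOLD = 5_000      # dollars needed to count as a "new large donor"
PLEDGE_MONTHS = 6      # monthly pledges are counted over six months


def min_monthly_gift(employer_match_ratio: float = 0.0) -> int:
    """Smallest whole-dollar monthly gift whose six-month total,
    including any employer match, reaches the threshold.

    employer_match_ratio is a hypothetical parameter: 1.0 means a 1:1 match.
    """
    own_share = THRESHOLD / (1 + employer_match_ratio)
    return math.ceil(own_share / PLEDGE_MONTHS)


def thiel_match(counted_total: float, prior_giving: float) -> float:
    """Thiel's match on a counted gift or pledge (overall cap ignored).

    Assumption: 3x applies only when past giving is under the threshold
    and the counted total is at or above it; otherwise the match is 1x.
    """
    new_large_donor = prior_giving < THRESHOLD and counted_total >= THRESHOLD
    return counted_total * (3 if new_large_donor else 1)


print(min_monthly_gift())        # no employer match
print(min_monthly_gift(1.0))     # 1:1 employer match
print(thiel_match(5_000, 0))     # first-time donor giving $5k
```

Under these assumptions the first two calls reproduce the $834/mo and $417/mo figures quoted in the list above.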

Thiel’s total match is capped at $250,000. The total amount raised will depend on how many people take advantage of 3:1 matching. We don’t anticipate being able to hit the $250k cap without substantial use of 3:1 matching, so if you have given less than $5k so far, please consider giving or pledging $5k or more during this drive. (If you’d like to know the total amount of your past donations to MIRI, just ask malo@intelligence.org.)

We have reached our matching total of $250,000!

Now is your chance to double or quadruple your impact in funding our research program.

Accomplishments Since Our July 2013 Fundraiser Launched:

- Held three research workshops, including our first European workshop.

- Gave talks at MIT and Harvard (Eliezer Yudkowsky and Paul Christiano).

- Yudkowsky is blogging more Open Problems in Friendly AI… on Facebook! (They’re also being written up in a more conventional format.)

- New papers: (1) Algorithmic Progress in Six Domains; (2) Embryo Selection for Cognitive Enhancement; (3) Racing to the Precipice; (4) Predicting AGI: What can we say when we know so little?

- New ebook: The Hanson-Yudkowsky AI-Foom Debate.

- New analyses: (1) From Philosophy to Math to Engineering; (2) How well will policy-makers handle AGI? (3) How effectively can we plan for future decades? (4) Transparency in Safety-Critical Systems; (5) Mathematical Proofs Improve But Don’t Guarantee Security, Safety, and Friendliness; (6) What is AGI? (7) AI Risk and the Security Mindset; (8) Richard Posner on AI Dangers; (9) Russell and Norvig on Friendly AI.

- New expert interviews: Greg Morrisett (Harvard), Robin Hanson (GMU), Paul Rosenbloom (USC), Stephen Hsu (MSU), Markus Schmidt (Biofaction), Laurent Orseau (AgroParisTech), Holden Karnofsky (GiveWell), Bas Steunebrink (IDSIA), Hadi Esmaeilzadeh (GIT), Nick Beckstead (Oxford), Benja Fallenstein (Bristol), Roman Yampolskiy (U Louisville), Ben Goertzel (Novamente), and James Miller (Smith College).

- With Leverage Research, we held a San Francisco book launch party for James Barrat’s Our Final Invention, which discusses MIRI’s work at length. (If you live in the Bay Area and would like to be notified of local events, please tell malo@intelligence.org!)

How Will Marginal Funds Be Used?

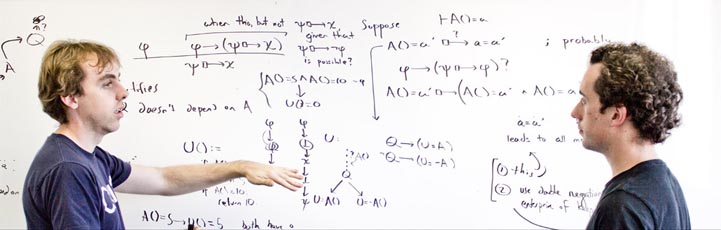

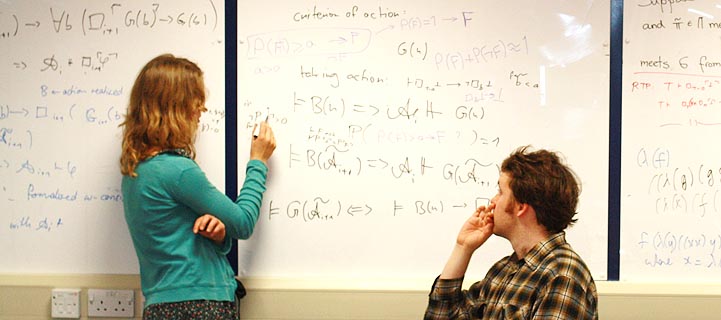

- Hiring Friendly AI researchers, identified through our workshops, as they become available for full-time work at MIRI.

- Running more workshops (next one begins Dec. 14th), to make concrete Friendly AI research progress, to introduce new researchers to open problems in Friendly AI, and to identify candidates for MIRI to hire.

- Describing more open problems in Friendly AI. Our current strategy is for Yudkowsky to explain them as quickly as possible via Facebook discussion, followed by more structured explanations written by others in collaboration with Yudkowsky.

- Improving humanity’s strategic understanding of what to do about superintelligence. In the coming months this will include (1) additional interviews and analyses on our blog, (2) a reader’s guide for Nick Bostrom’s forthcoming Superintelligence book, and (3) an introductory ebook currently titled Smarter Than Us.

Other projects are still being surveyed for likely cost and impact.

We appreciate your support for our work! Donate now, and seize a better-than-usual chance to move our work forward. If you have questions about donating, please contact Louie Helm at (510) 717-1477 or louie@intelligence.org.